The Actor Model and Its Properties

The actor model was developed in the 1970s for concurrent and parallel computations. It defines some key rules for how system components should behave and interact with each other. The main idea is that interactions between program components are not conducted through function or procedure calls but through the exchange of asynchronous messages. You can read more about the actor model and its history in the Wikipedia article.

One of the most popular programming languages that uses this model is Erlang, where the actor model is at the core of its BEAM virtual machine. In Erlang, actors are represented by lightweight processes within the virtual machine.

There are also implementations of this model in other languages. For example, in Java, the Akka framework provides a powerful tool for building solutions based on the actor model. Akka brings the principles of the actor model to the Java ecosystem, enabling developers to create highly concurrent, distributed, and fault-tolerant applications.

The Ergo Framework implements the actor model based on lightweight processes. Each process can send and receive asynchronous messages, make synchronous calls, and spawn new processes.

A process in Ergo Framework is a lightweight entity running on top of a goroutine, built around the actor model. Each process has a mailbox for incoming messages. By default, this mailbox is of unlimited size, but it can be limited by setting an appropriate parameter when the process is started. The mailbox contains four queues: Main, System, Urgent, and Log. These queues determine the priority of message processing.

An actor in Ergo Framework is an abstraction over such a process, with a set of callbacks for handling incoming messages. The standard library includes general-purpose actors like act.Actor, act.Supervisor, and act.Pool, as well as a specialized actor for handling HTTP requests, act.WebHandler.

Mailbox processing in an actor is done sequentially in a FIFO order, but with respect to priority. Messages from the Urgent queue are processed first, followed by those in the System queue. If these queues are empty, messages from the Main queue are processed. Typically, messages from the node (e.g., a request to stop the process) are placed in the Urgent and System queues. All other messages are delivered to the Main queue by default. Messages in the Log queue are processed with the lowest priority.
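The priority order described above can be illustrated with a minimal sketch. It is not the framework's mailbox (which, according to this document, uses lock-free queues); the `mailbox` type, its methods, and the message strings are hypothetical and only show how FIFO order within a queue combines with priority between queues.

```go
package main

import "fmt"

// queue identifies one of the four mailbox queues, ordered by priority.
type queue int

const (
	urgent queue = iota
	system
	mainQ
	logQ
)

// mailbox is a simplified, single-goroutine stand-in for a process mailbox.
type mailbox struct {
	queues [4][]string
}

// push appends a message to the given queue (FIFO within that queue).
func (m *mailbox) push(q queue, msg string) {
	m.queues[q] = append(m.queues[q], msg)
}

// next returns the oldest message from the highest-priority non-empty queue.
func (m *mailbox) next() (string, bool) {
	for q := range m.queues {
		if len(m.queues[q]) > 0 {
			msg := m.queues[q][0]
			m.queues[q] = m.queues[q][1:]
			return msg, true
		}
	}
	return "", false
}

// drain pops messages until the mailbox is empty.
func drain(m *mailbox) []string {
	var out []string
	for {
		msg, ok := m.next()
		if !ok {
			return out
		}
		out = append(out, msg)
	}
}

func main() {
	m := &mailbox{}
	m.push(mainQ, "work-1")
	m.push(logQ, "log-1")
	m.push(system, "sys-1")
	m.push(mainQ, "work-2")
	m.push(urgent, "stop")
	fmt.Println(drain(m)) // prints [stop sys-1 work-1 work-2 log-1]
}
```

Messages pushed to the same queue keep their arrival order, while a later Urgent message still overtakes everything that was already waiting in the other queues.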

Ergo Framework is an implementation of ideas, technologies, and design patterns from the Erlang world in the Go programming language. It is built on the actor model, network transparency, and a set of ready-to-use components for development. This makes it significantly easier to create complex and distributed solutions while maintaining a high level of reliability and performance.

The management of starting and stopping processes (actors), as well as routing messages between them, is handled by the node. The node is also responsible for network communication. If a message is sent to a remote process, the node automatically establishes a network connection with the remote node where the process is running, thus ensuring network transparency.

Actors in Ergo Framework are lightweight processes that live on top of goroutines. Interaction between processes occurs through message passing. Each process has a mailbox with multiple queues to prioritize message handling. Actors can exchange asynchronous messages and also make synchronous requests to each other.

Each node has a built-in registrar function. Typically, the first node launched on a host becomes the registrar for other nodes running on the same host. Upon registration, each node reports its name, the port number for incoming connections, and a set of additional parameters.

Network transparency in Ergo Framework is achieved through the ENP (Ergo Network Protocol) and EDF (Ergo Data Format). These are based on ideas from Erlang's network stack but are not compatible with it. Therefore, to communicate with Erlang nodes, an additional Erlang package must be used.

To maximize performance in network message exchange, Ergo Framework uses a pool of multiple TCP connections combined into a single logical network connection.
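One way to picture a pool of connections behind a single logical connection is the sketch below. The round-robin distribution is an assumption made for illustration; this document does not say how the framework spreads messages across the pooled TCP connections. The `logicalConn` type is hypothetical, and in-memory buffers stand in for TCP sockets.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
)

// logicalConn groups several underlying connections behind one Write method.
type logicalConn struct {
	conns []io.Writer
	next  int
}

// Write sends the message over the next connection in round-robin order.
// It is not safe for concurrent use; a real implementation would need
// synchronization and per-sender ordering guarantees.
func (l *logicalConn) Write(p []byte) (int, error) {
	c := l.conns[l.next%len(l.conns)]
	l.next++
	return c.Write(p)
}

// demo writes three messages through a pool of two in-memory "connections"
// and returns what each of them received.
func demo() (string, string) {
	a, b := &bytes.Buffer{}, &bytes.Buffer{}
	lc := &logicalConn{conns: []io.Writer{a, b}}
	for _, msg := range []string{"m1", "m2", "m3"} {
		lc.Write([]byte(msg))
	}
	return a.String(), b.String()
}

func main() {
	first, second := demo()
	fmt.Println(first, second) // prints m1m3 m2
}
```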

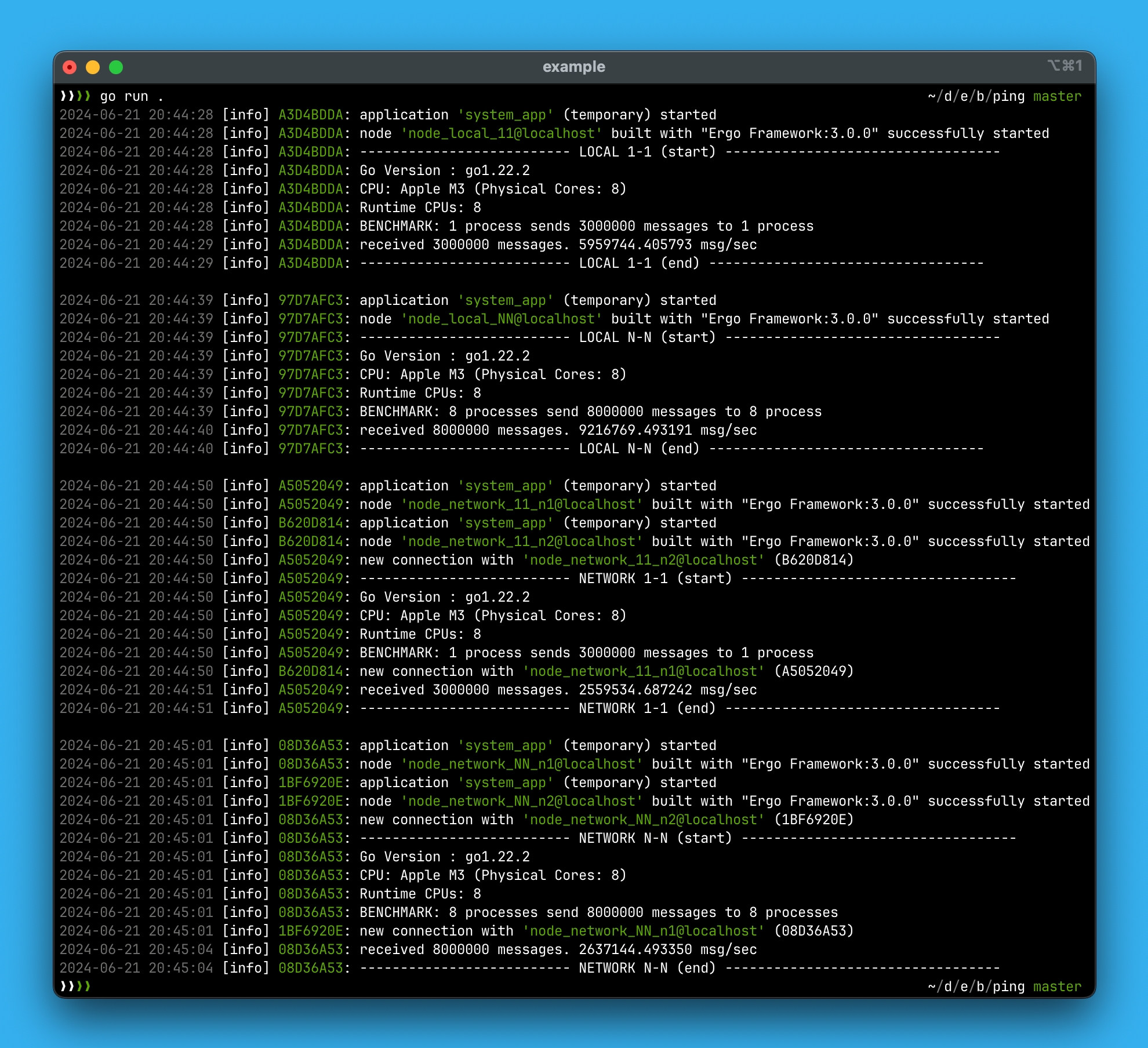

Ergo Framework demonstrates high performance in local message exchange due to the use of lock-free queues within the process mailbox and the efficient use of goroutines to handle the process itself. A goroutine is only activated when the process receives a message; otherwise, the process remains idle and does not consume CPU resources.

You can evaluate the performance yourself using the provided benchmarks.

In the development of Ergo Framework, we adhere to the concept of zero dependencies. All functionality is implemented using only the standard Go library. The only requirement for using Ergo Framework is the version of the Go language. Starting from version 3.0, Ergo Framework depends on Go 1.20 or higher.

process control

To create fault-tolerant applications, Ergo Framework introduces a process structuring model. The core idea of this model is to divide processes into two types:

worker

supervisor

Worker processes perform computations, while supervisors are responsible solely for managing worker processes.

In Ergo Framework, worker processes are actors based on act.Actor. Supervisors, on the other hand, are actors based on act.Supervisor. The role of act.Supervisor is to start child processes and restart them according to the chosen restart strategy. Several

act.Actor but also a supervisor based on act.Supervisor. This allows you to form a hierarchical structure for managing processes. Thanks to this approach, your solution becomes more reliable and fault-tolerant.
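The worker/supervisor split can be sketched without the framework. The example below is not act.Supervisor; it assumes a single hypothetical strategy ("restart on failure, up to a limit") and models a worker as a plain function, only to show how restart logic stays outside the worker's own code.

```go
package main

import (
	"errors"
	"fmt"
)

// worker is a hypothetical unit of work; a non-nil error counts as a crash.
type worker func(attempt int) error

// runSafely converts a panic inside the worker into an ordinary error.
func runSafely(w worker, attempt int) (err error) {
	defer func() {
		if r := recover(); r != nil {
			err = fmt.Errorf("worker panicked: %v", r)
		}
	}()
	return w(attempt)
}

// supervise runs the worker and restarts it after each failure,
// giving up once maxRestarts restarts have been used.
func supervise(w worker, maxRestarts int) (restarts int, err error) {
	for attempt := 0; ; attempt++ {
		err = runSafely(w, attempt)
		if err == nil || restarts == maxRestarts {
			return restarts, err
		}
		restarts++
	}
}

// flaky fails on its first two attempts and then succeeds.
func flaky(attempt int) error {
	if attempt < 2 {
		return errors.New("transient failure")
	}
	return nil
}

func main() {
	restarts, err := supervise(flaky, 5)
	fmt.Println(restarts, err) // prints 2 <nil>
}
```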

The Ergo Framework allows nodes to run with various network stacks. You can replace the default network stack or add it as an additional stack. For more information, refer to the Network Stack section.

This library contains implementations of network stacks that are not part of the standard Ergo Framework library.

An extra library of logger implementations that are not included in the standard Ergo Framework library. This library contains packages with a narrow specialization. It also includes packages that have external dependencies, as Ergo Framework adheres to a "zero dependency" policy.

An extra library of meta-process implementations not included in the standard Ergo Framework library. This library contains packages with a narrow specialization. It also includes packages with external dependencies, as Ergo Framework adheres to a "zero dependency" policy.

Data Types and Interfaces Used in Ergo Framework

gen.Atom is an alias for the string type. It was introduced as a specialized string that allows differentiation between regular strings and those used for node names or process names. In the network stack, this type is also handled separately and is actively used in the Atom-cache and Atom-mapping mechanisms. When printed, values of this type are enclosed in single quotes.

gen.PID is used as a process identifier. It is a structure containing several fields: the node name Node, a unique sequential number within the node ID, and a Creation field.

When printed as a string, this type is transformed into the following representation:

The node name is encoded into a hash using the CRC32 algorithm.
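A rough sketch of such a string representation is shown below. It is an illustration, not the library's code: the local `pid` type only mirrors the three fields named above, the IEEE CRC32 polynomial is an assumption, and the layout (hash, then the high and low 32 bits of the ID) is inferred from the sample output `<90A29F11.0.1001>` that appears elsewhere in this document.

```go
package main

import (
	"fmt"
	"hash/crc32"
)

// pid mirrors the fields described above; it is not the library type.
type pid struct {
	Node     string
	ID       uint64
	Creation int64
}

// String renders <CRC32(node).high32(ID).low32(ID)>.
// Both the polynomial and the field layout are assumptions.
func (p pid) String() string {
	hash := crc32.ChecksumIEEE([]byte(p.Node))
	return fmt.Sprintf("<%08X.%d.%d>", hash, uint32(p.ID>>32), uint32(p.ID))
}

func main() {
	p := pid{Node: "t1node@localhost", ID: 1001, Creation: 1685523227}
	fmt.Println(p)
}
```

Hashing the node name keeps the printed identifier short and of fixed width regardless of how long the node name is.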

The node can generate unique identifiers. For this purpose, the gen.Node interface provides the MakeRef method. It returns a guaranteed unique value of type gen.Ref. These values are used as unique request identifiers in synchronous calls and as unique tokens when registering a gen.Event by a producer process.

When printed as a string, the value is represented as:

gen.ProcessID is used as a process identifier with an associated name. It is a structure containing two fields: the process name Name and the node name Node.

When printed as a string, the node name is transformed into a hash using the CRC32 algorithm, resulting in the following representation:

gen.Alias is an alias for the gen.Ref type. Values of type gen.Alias are used as temporary process identifiers, created using the CreateAlias method in the gen.Process interface, as well as identifiers for meta-processes.

When printed as a string, the representation is similar to gen.Ref, but with a different prefix:

Values of type gen.Event are used when subscribing to events through the MonitorEvent and LinkEvent methods. This type is a structure similar to gen.ProcessID, containing two fields: Name and Node.

When printed as a string, the representation is similar to gen.ProcessID, but with an added prefix:

gen.Env is an alias for the string type. It is used for the names of environment variables for nodes and processes. In Ergo Framework, environment variable names are case-insensitive.

When printed as a string, the value of this type is converted to uppercase:

To start a node, use the function ergo.StartNode(...). If the node starts successfully, it returns the gen.Node interface. This interface provides a set of functions for interacting with the node. The full list of methods can be found in the reference documentation.

This type is an interface to a process object. In Ergo Framework, this interface is embedded in the actor act.Actor, so all methods of this interface become available to objects based on act.Actor (and its derivatives).

The full list of available methods for the gen.Process interface can be found in the reference documentation.

You can access this interface using the Network method of the gen.Node interface. This interface provides a set of methods for managing the node's network stack. The full list of available methods for this interface can be found in the reference documentation.

This interface allows you to retrieve information about a remote node with which a connection has been established, as well as to spawn processes (using the Spawn and SpawnRegister methods) and start applications on it (using the ApplicationStart* methods). You can access this interface through the GetNode and Node methods of the gen.Network interface.

This meta-process allows you to launch a UDP server and handle incoming UDP packets as asynchronous messages of type meta.MessageUDP.

To create the meta-process, use the meta.CreateUDPServer function. This function takes meta.UDPServerOptions as an argument, which specifies the configuration options for the UDP server, such as the host, port, buffer size, and other relevant settings for managing UDP traffic:

Host, Port: Set the port number and host name for the UDP server.

Features:

Centralized service discovery

Real-time event notifications

Configuration management

TLS security support

Token-based authentication

A client library for etcd, a distributed key-value store. Provides decentralized service discovery, hierarchical configuration management with type conversion, and automatic lease management.

Features:

Distributed service discovery

Hierarchical configuration with type conversion from strings ("int:123", "float:3.14")

Automatic lease management and cleanup

Real-time cluster change notifications

TLS/authentication support

Four-level configuration priority system
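The typed-string convention mentioned in the feature list ("int:123", "float:3.14") could be decoded along the following lines. This is a sketch, not the etcd client's code: the function name, the returned Go types (int64 and float64), and the fallback for unrecognized prefixes are all assumptions.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseTyped converts "int:123" to int64(123) and "float:3.14" to
// float64(3.14). Values without a recognized prefix are returned
// unchanged as strings.
func parseTyped(raw string) (any, error) {
	prefix, value, found := strings.Cut(raw, ":")
	if !found {
		return raw, nil
	}
	switch prefix {
	case "int":
		return strconv.ParseInt(value, 10, 64)
	case "float":
		return strconv.ParseFloat(value, 64)
	default:
		return raw, nil
	}
}

func main() {
	for _, raw := range []string{"int:123", "float:3.14", "plain text"} {
		v, err := parseTyped(raw)
		fmt.Printf("%q -> %T %v (err: %v)\n", raw, v, v, err)
	}
}
```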

Choose Saturn for centralized management with a dedicated registrar service, or etcd for a distributed approach with built-in consensus and reliability guarantees.

The additional application library for Ergo Framework contains packages with a narrow specialization or external dependencies since Ergo Framework adheres to the "zero dependencies" principle.

You can find the source code of these applications in the application library repository at https://github.com/ergo-services/application.

Printing a gen.Atom value:

    fmt.Printf("%s", gen.Atom("hello"))
    ...
    'hello'

Printing a gen.PID value:

    pid := gen.PID{Node: "t1node@localhost", ID: 1001, Creation: 1685523227}
    fmt.Printf("%s", pid)
    ...
    <90A29F11.0.1001>

Printing a gen.Ref value:

    ref := gen.Ref{
        Node:     "t1node@localhost",
        Creation: 1685524098,
        ID:       [3]uint32{0x1f4c2, 0x5d90, 0x0},
    }
    fmt.Printf("%s", ref)
    ...
    Ref#<90A29F11.128194.23952.0>

Printing a gen.ProcessID value:

    process := gen.ProcessID{Name: "example", Node: "t1node@localhost"}
    fmt.Printf("%s", process)
    ...
    <90A29F11.'example'>

Printing a gen.Alias value:

    alias := gen.Alias{
        Node:     "t1node@localhost",
        Creation: 1685524098,
        ID:       [3]uint32{0x1f4c2, 0x5d90, 0x0},
    }
    fmt.Printf("%s", alias)
    ...
    Alias#<90A29F11.128194.23952.0>

Printing a gen.Event value:

    event := gen.Event{Name: "event1", Node: "t1node@localhost"}
    fmt.Printf("%s", event)
    ...
    Event#<90A29F11:'event1'>

Printing a gen.Env value:

    env := gen.Env("name1")
    fmt.Printf("%s", env)
    ...
    NAME1

Using gen.Process methods from an actor that embeds act.Actor:

    type myActor struct {
        act.Actor
    }

    func (a *myActor) Init(args ...any) error {
        // Send a message to itself using the PID and Send methods
        // that belong to the embedded gen.Process interface
        a.Send(a.PID(), "hello")
        return nil
    }

BufferSize: Sets the buffer size created for incoming UDP messages. For each message, a new buffer of this size is allocated. This field is ignored if BufferPool is provided.

BufferPool: Defines a pool for message buffers. The Get method should return an allocated buffer of type []byte.
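A pool that satisfies the requirement above (Get returns an allocated `[]byte`) can be built with the standard `sync.Pool`, which is the type of the BufferPool field in the UDPServerOptions struct shown in this document. The buffer size of 2048 bytes and the helper name are arbitrary choices for illustration.

```go
package main

import (
	"fmt"
	"sync"
)

// bufferSize is a hypothetical size; pick one that fits your datagrams.
const bufferSize = 2048

// newBufferPool returns a pool whose Get yields an allocated []byte.
func newBufferPool(size int) *sync.Pool {
	return &sync.Pool{
		New: func() any { return make([]byte, size) },
	}
}

func main() {
	pool := newBufferPool(bufferSize)

	buf := pool.Get().([]byte) // what a server would do per incoming packet
	fmt.Println(len(buf))      // prints 2048

	pool.Put(buf) // return the buffer once the packet has been handled
}
```

Reusing buffers this way avoids allocating a fresh slice for every packet, which is the case the BufferSize option describes.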

If the meta-process is successfully created, the UDP server will start, and the function meta.CreateUDPServer will return gen.MetaBehavior.

Next, the created meta-process must be started. It will handle incoming UDP packets and forward them to the process for handling. To start the meta-process, use the SpawnMeta method of the gen.Process interface.

If an error occurs when starting the meta-process, the UDP server created by the meta.CreateUDPServer function must be stopped using the Terminate method of the gen.MetaBehavior interface.

Stopping the meta-process results in the shutdown of the UDP server and the closing of the socket.

To send a UDP packet, use the Send (or SendAlias) method from the gen.Process interface. The recipient should be the identifier gen.Alias of the UDP server meta-process. The message should be of type meta.MessageUDP. The meta.MessageUDP.ID field is ignored when sending (it is used only for incoming messages).

If a BufferPool was used in the UDP server options, the buffer in meta.MessageUDP.Data will be returned to the pool after the packet is sent.

For an example UDP server implementation, see the project demo at https://github.com/ergo-services/examples.

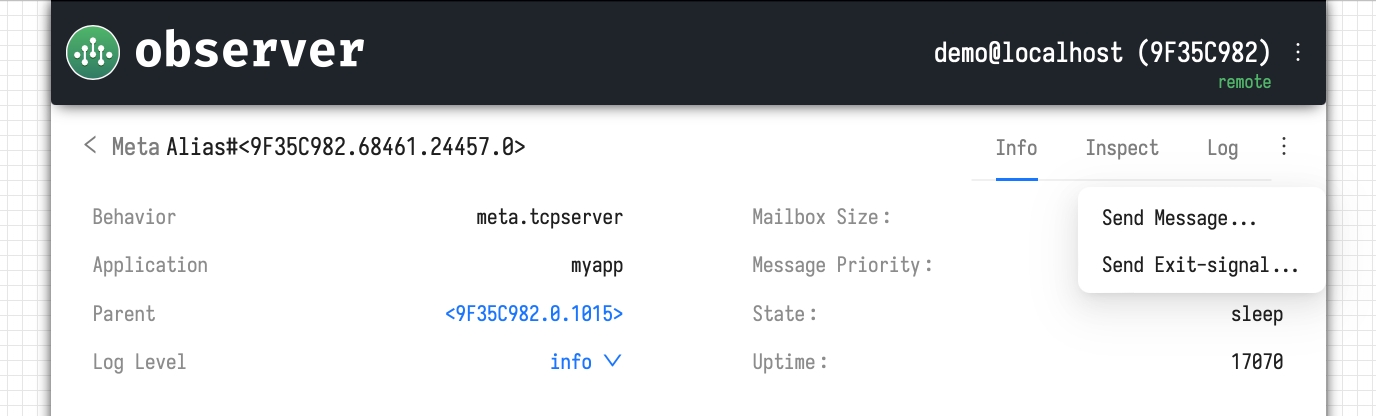

Meta-processes are designed to integrate synchronous objects into the asynchronous model of Ergo Framework. Although they share some similarities with regular processes, they have a different nature and operational characteristics:

Meta-Process Identifier: a meta-process has an identifier (of type gen.Alias) and is associated with its parent process. This allows message routing and synchronous requests to be directed to the meta-process.

Sending Messages: a meta-process can send asynchronous messages to other processes or meta-processes (including remote ones) using the Send method in the gen.MetaProcess

What is a Node in Ergo Framework?

A Node is the core of the service you create using Ergo Framework. This core includes:

Process Management Subsystem: handles the starting/stopping of processes, and the registration of process names/aliases.

Message Routing Subsystem: manages the routing of asynchronous messages and synchronous requests between processes.

Pub/Sub Subsystem: powers the linking, monitoring, and events functionality, enabling distributed event handling.

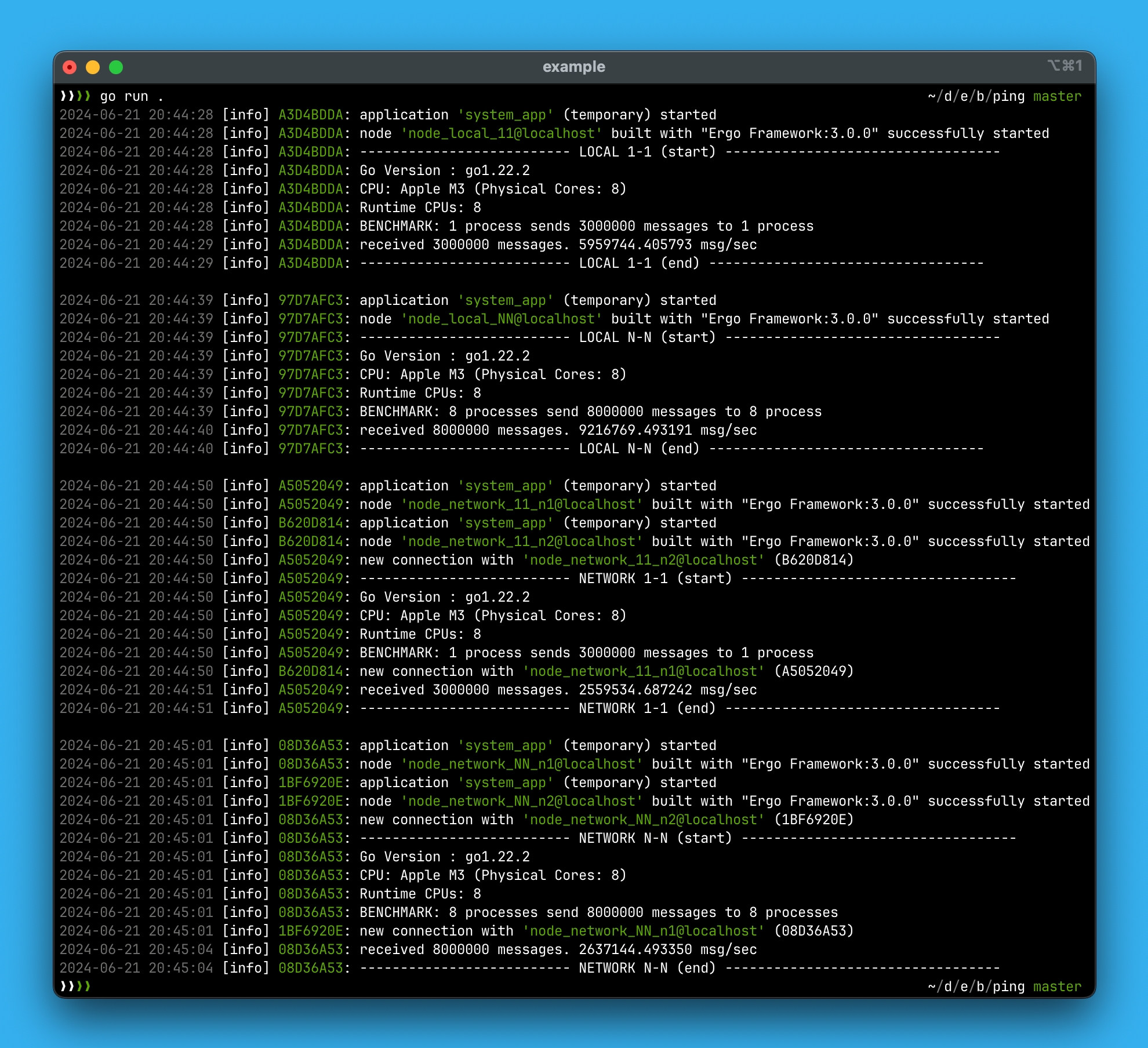

The events mechanism in Ergo Framework is built on top of the pub/sub subsystem. It allows any process to become an event producer, while other processes can subscribe to these events. This enables flexible event-driven architectures, where processes can publish and consume events across the system.

To register an event (gen.Event), you use the RegisterEvent method available in the gen.Process or gen.Node

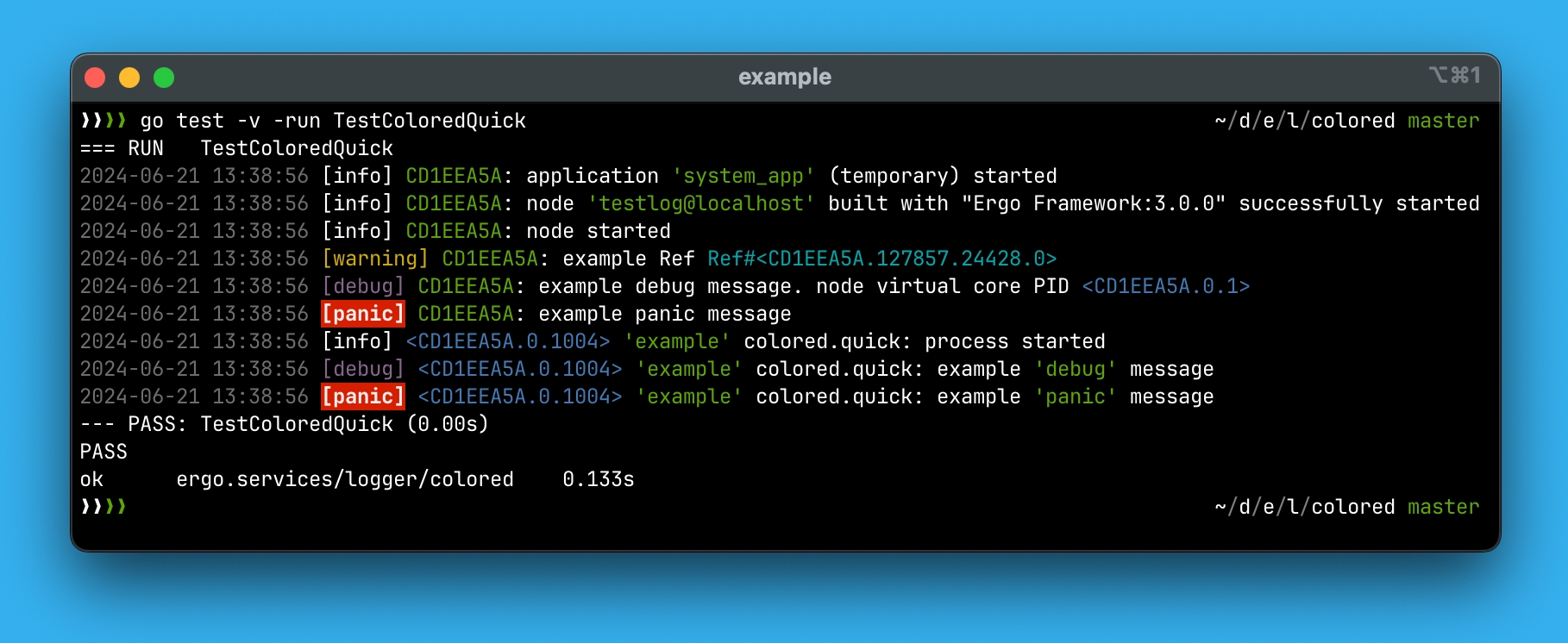

This package implements the gen.LoggerBehavior interface and provides the ability to output log messages to standard output with color highlighting.

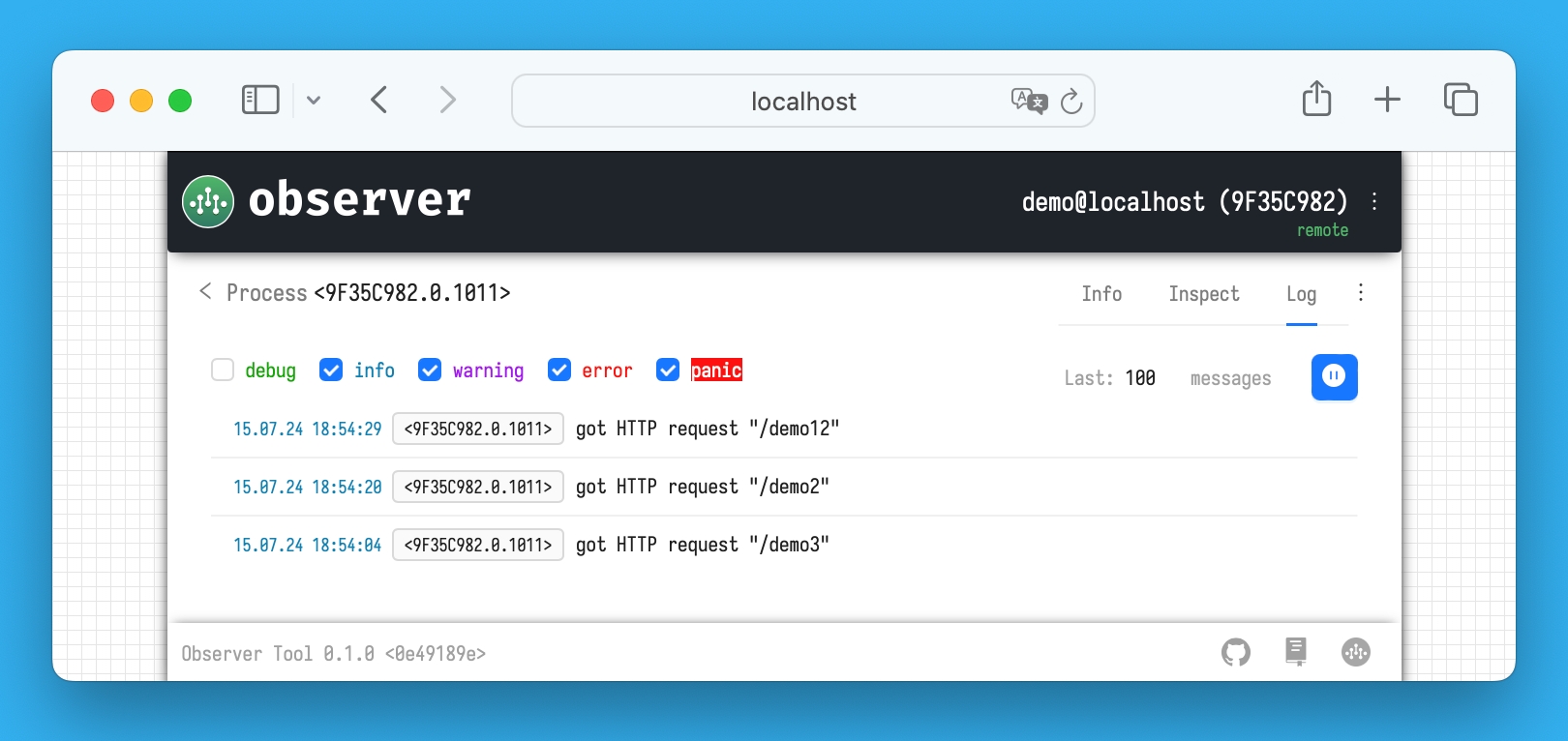

Below is a demonstration of log messages (from nodes, processes, and meta-processes) with different logging levels:

<time> <level> <log source> [process name] [process behavior]: <log message>

Registrar

The service discovery mechanism allows nodes to automatically find other nodes and determine connection parameters.

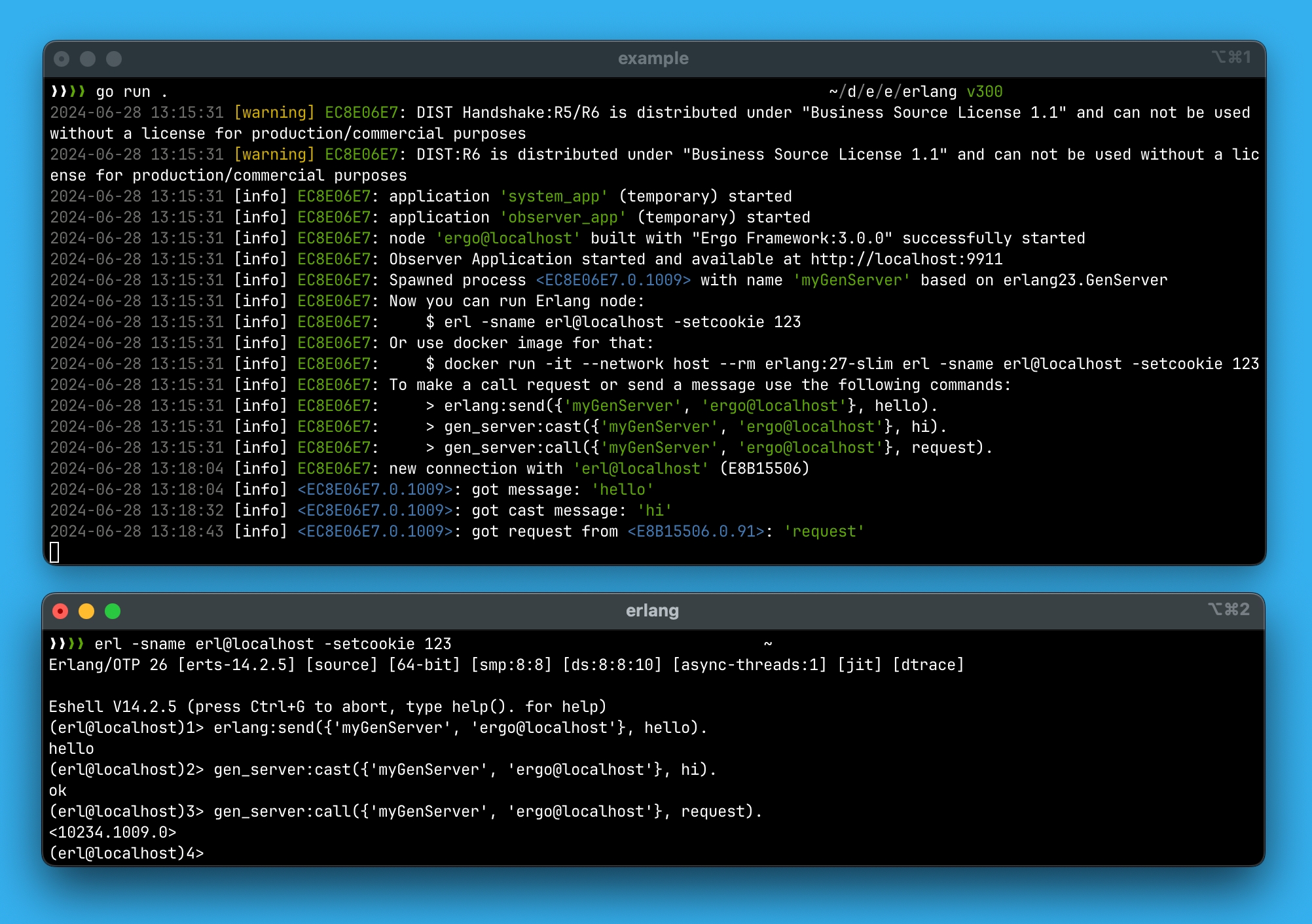

Each node has a Registrar that starts during the node's initialization and operates in either server or client mode. In Ergo Framework, the default Registrar implementation is available in the package ergo.services/ergo/net/registrar. If you are using Saturn, you need to use the corresponding client available in ergo.services/registrar/saturn. For communication with Erlang nodes, you should use the implementation from ergo.services/proto/erlang23/epmd.

The default Registrar, when running in server mode, opens a TCP socket on localhost:4499 to register other nodes on the host and a UDP socket on *:4499 to handle resolve requests from other nodes, including remote ones. If the Registrar fails to start in server mode, it switches to client mode and registers with the

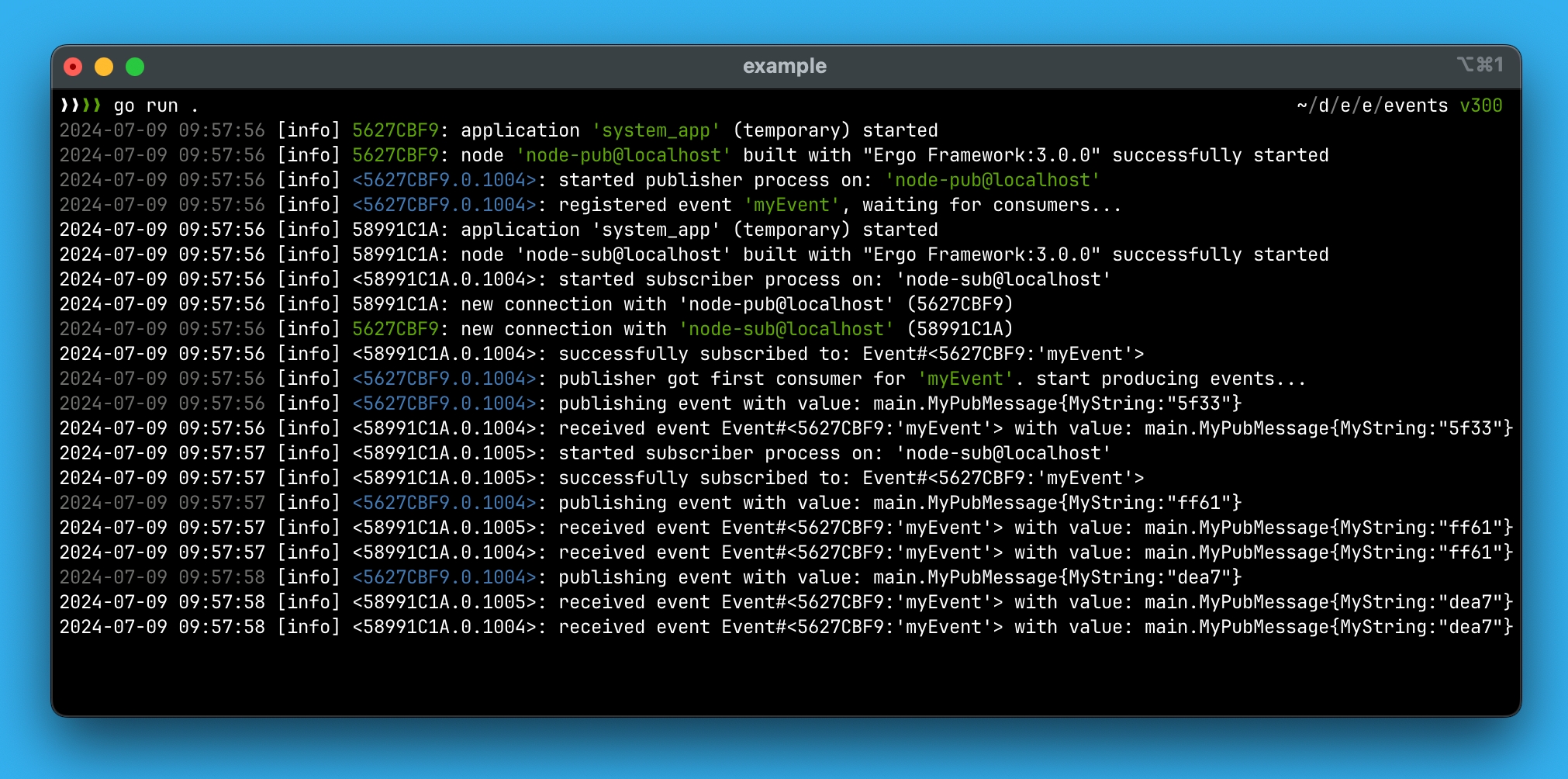

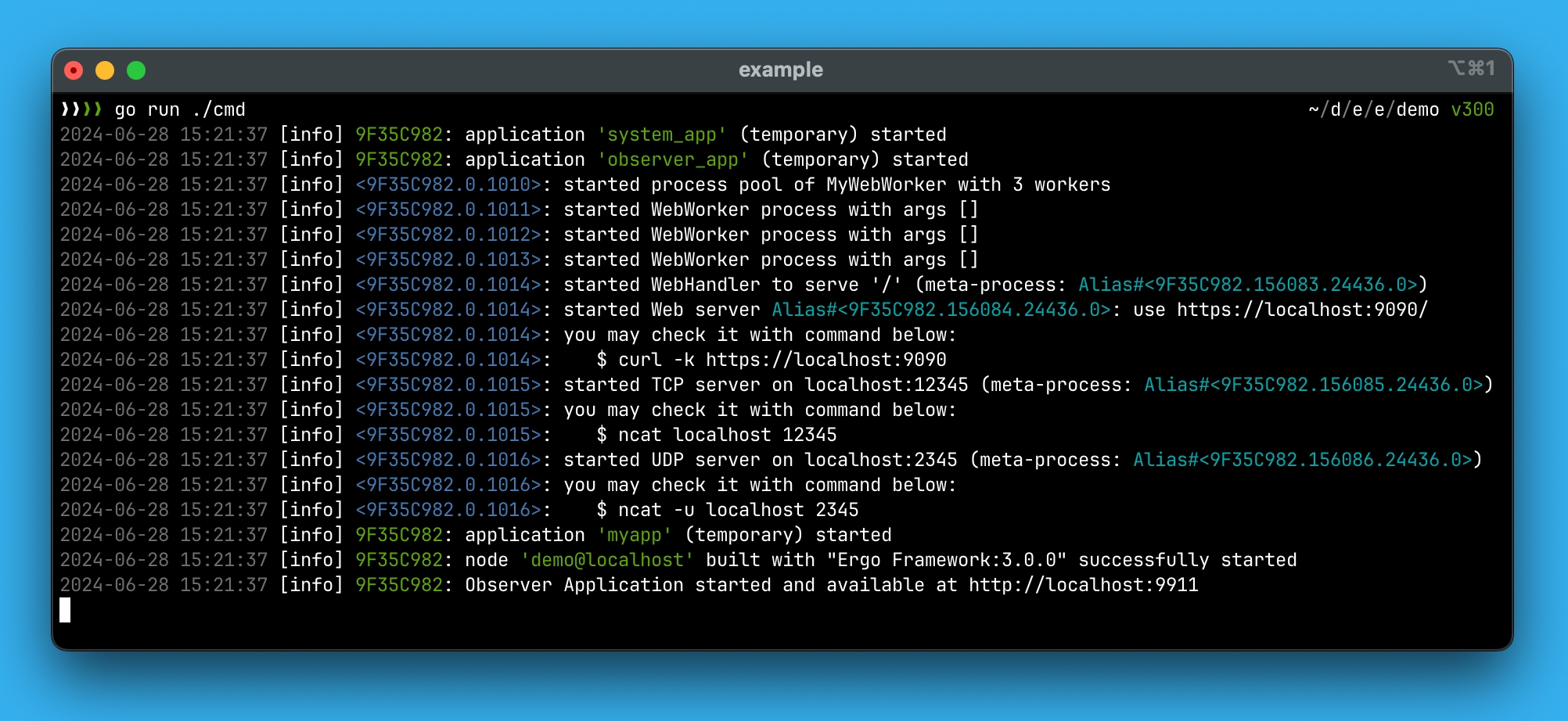

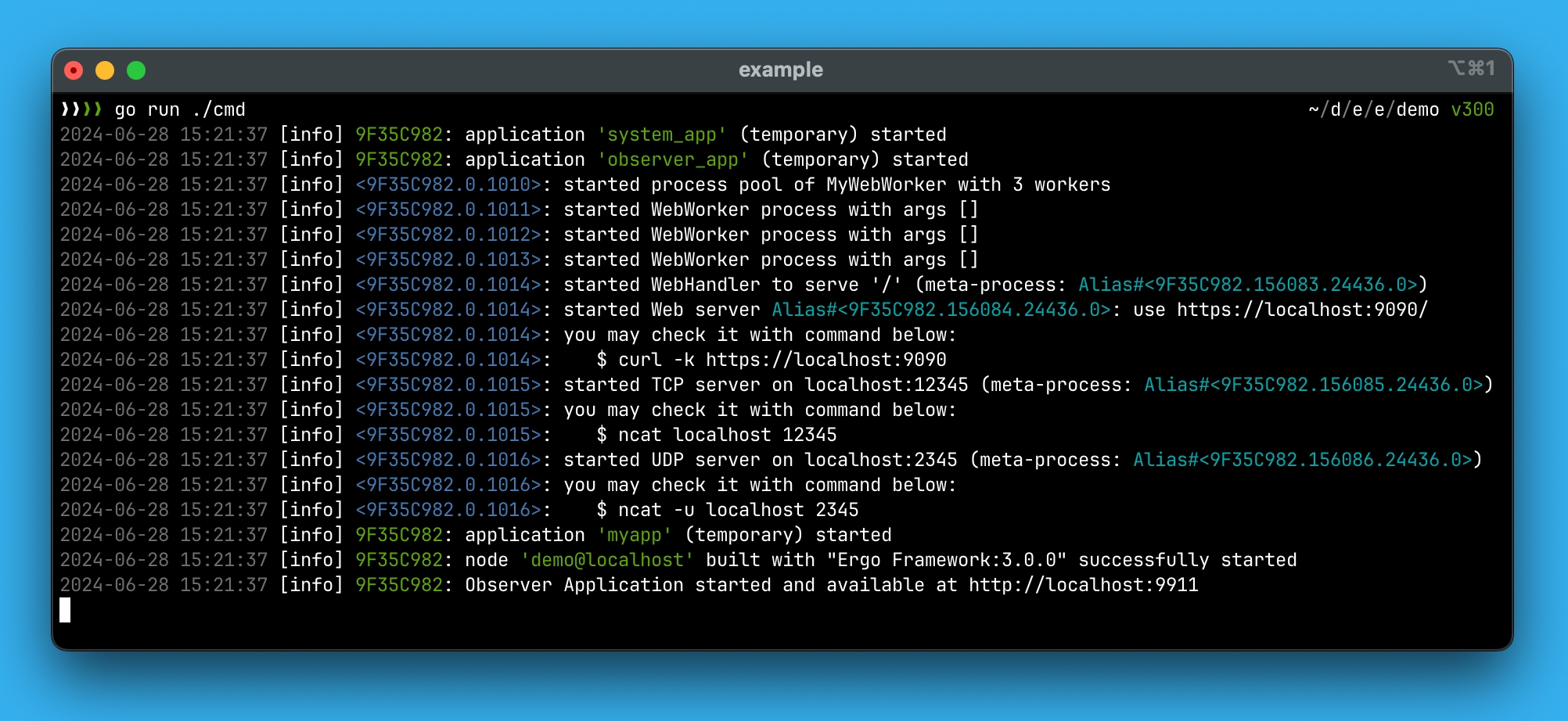

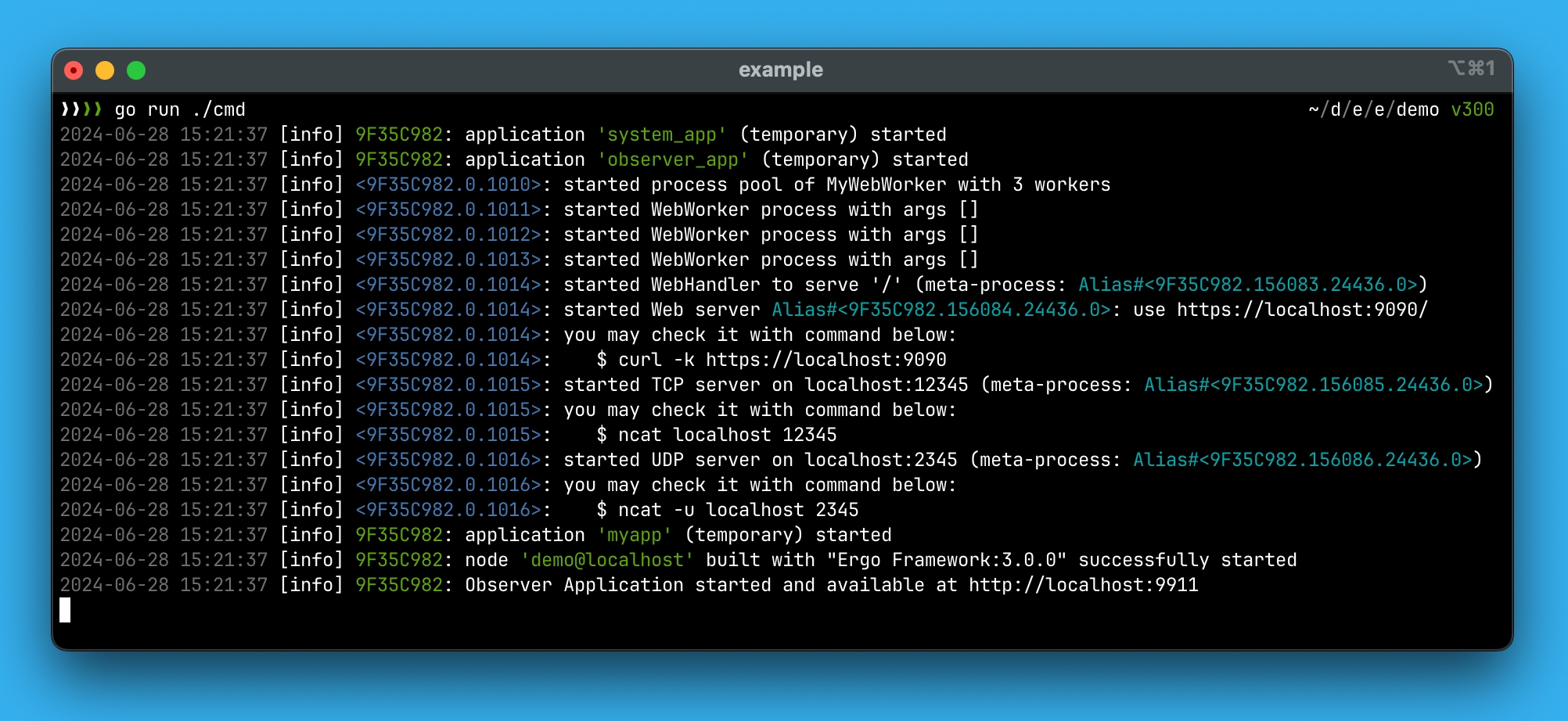

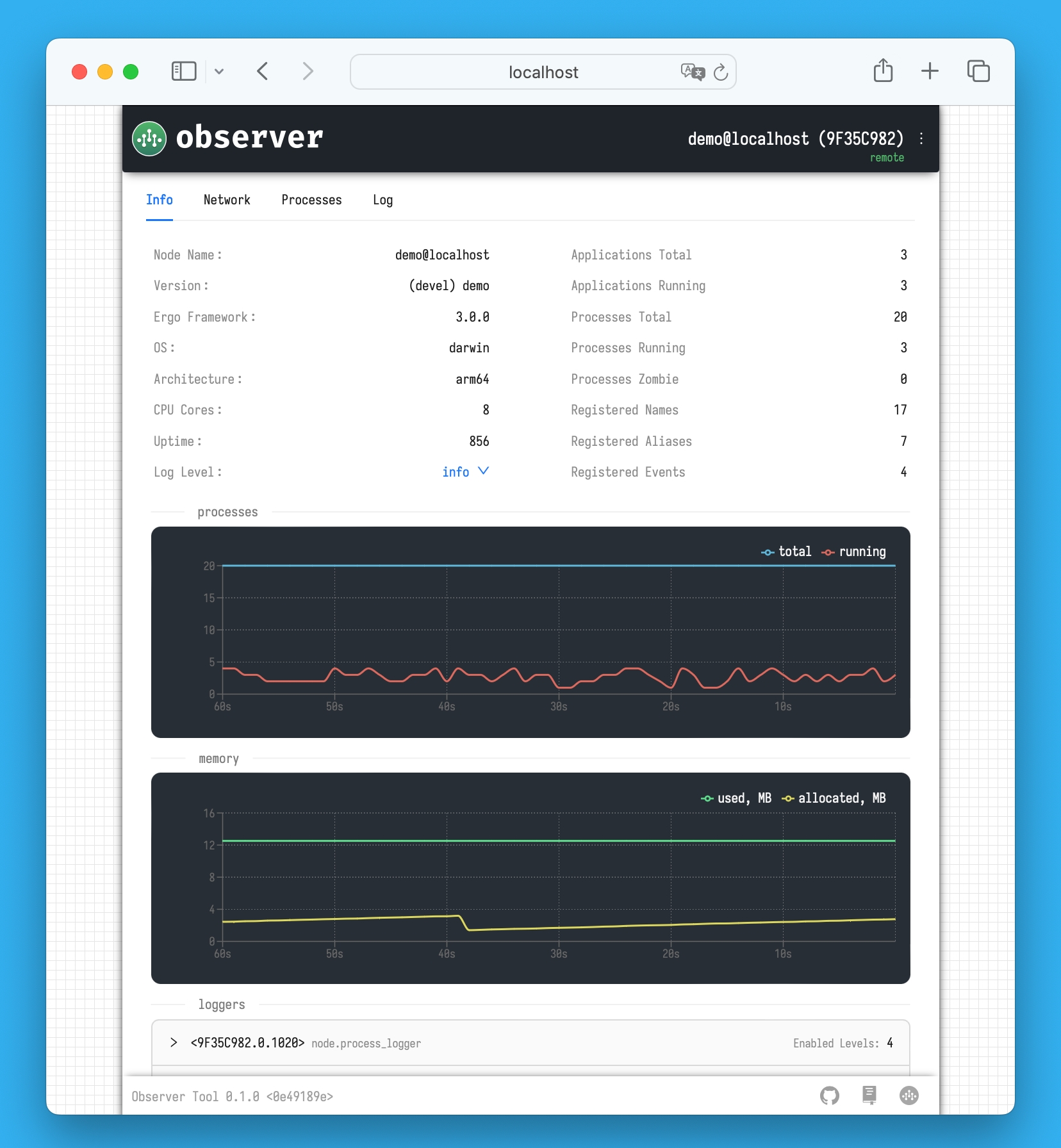

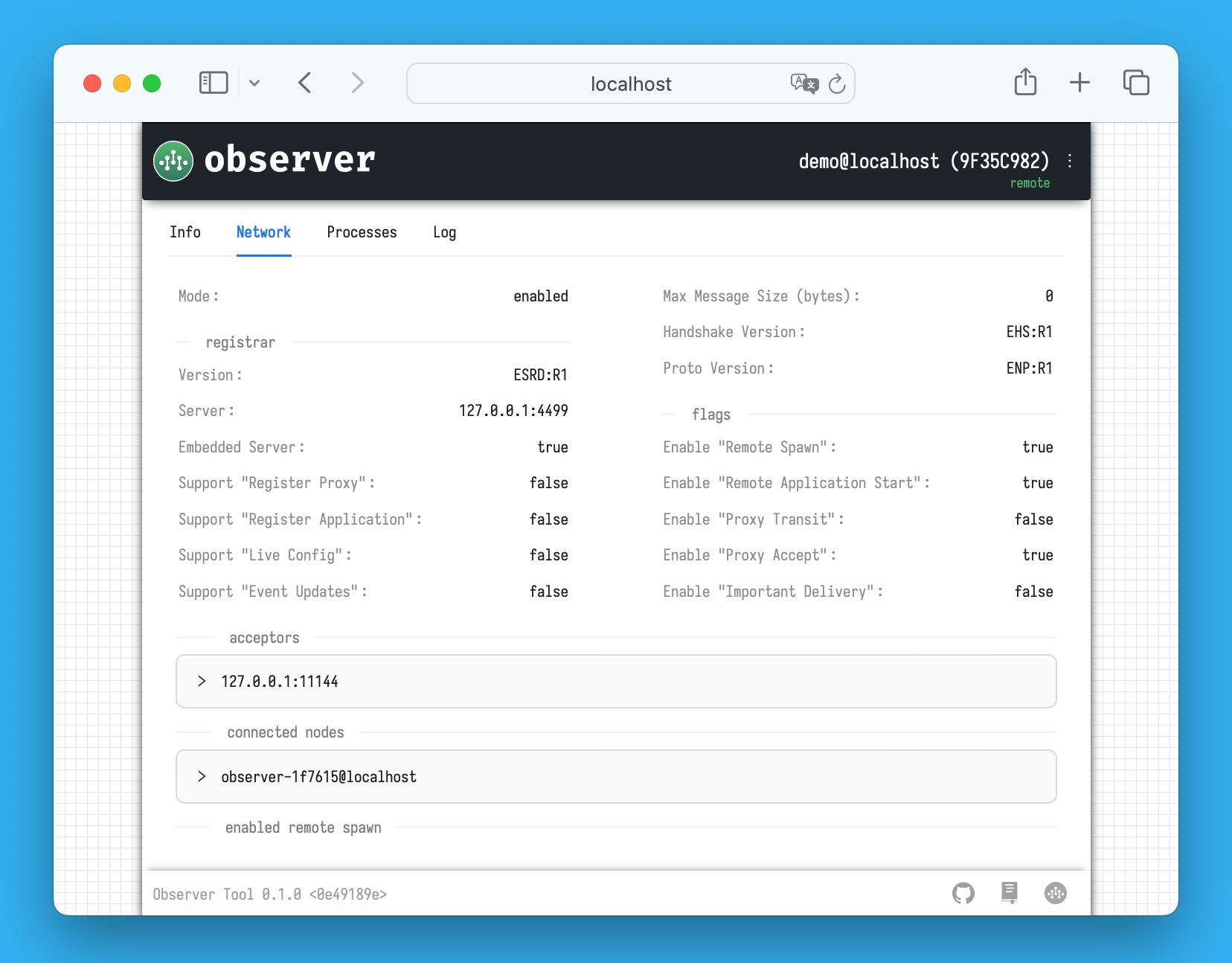

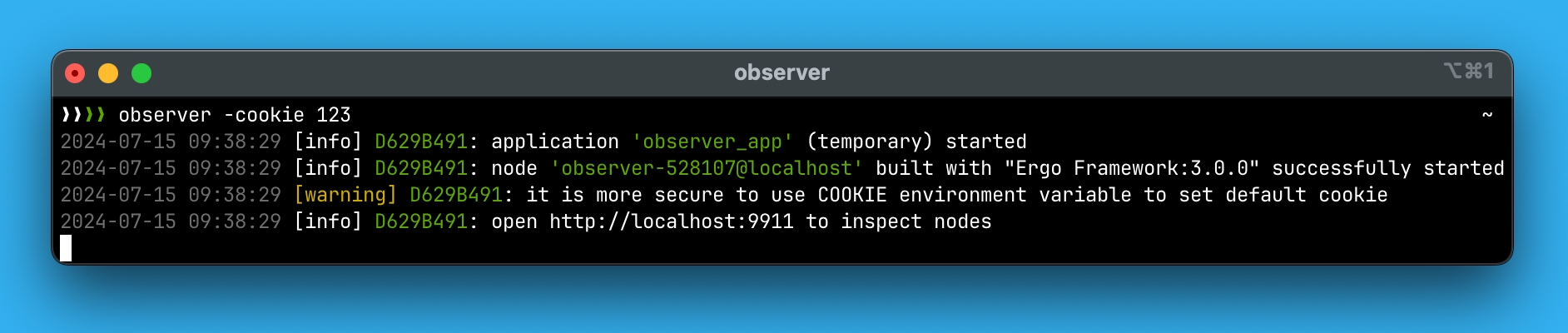

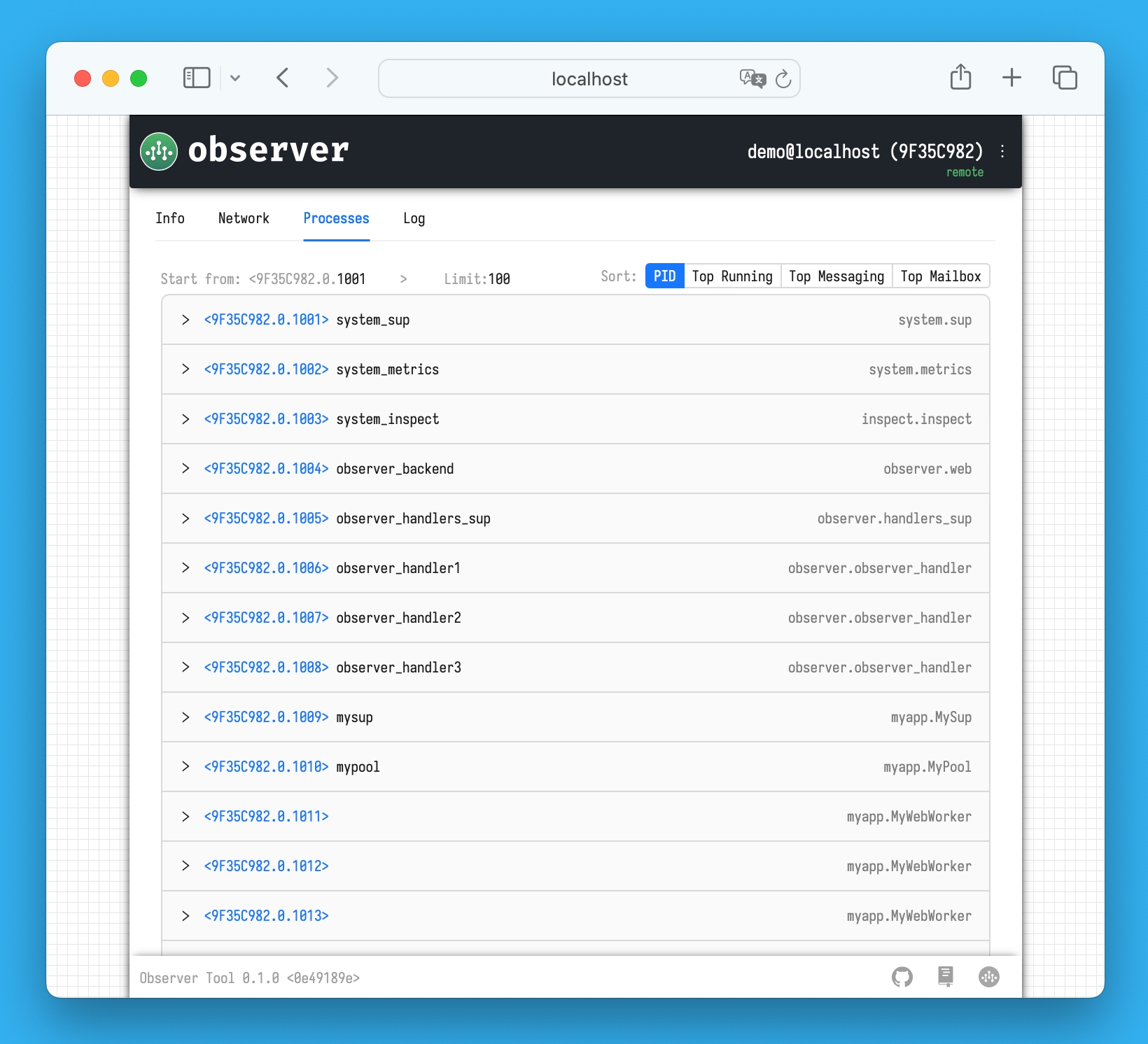

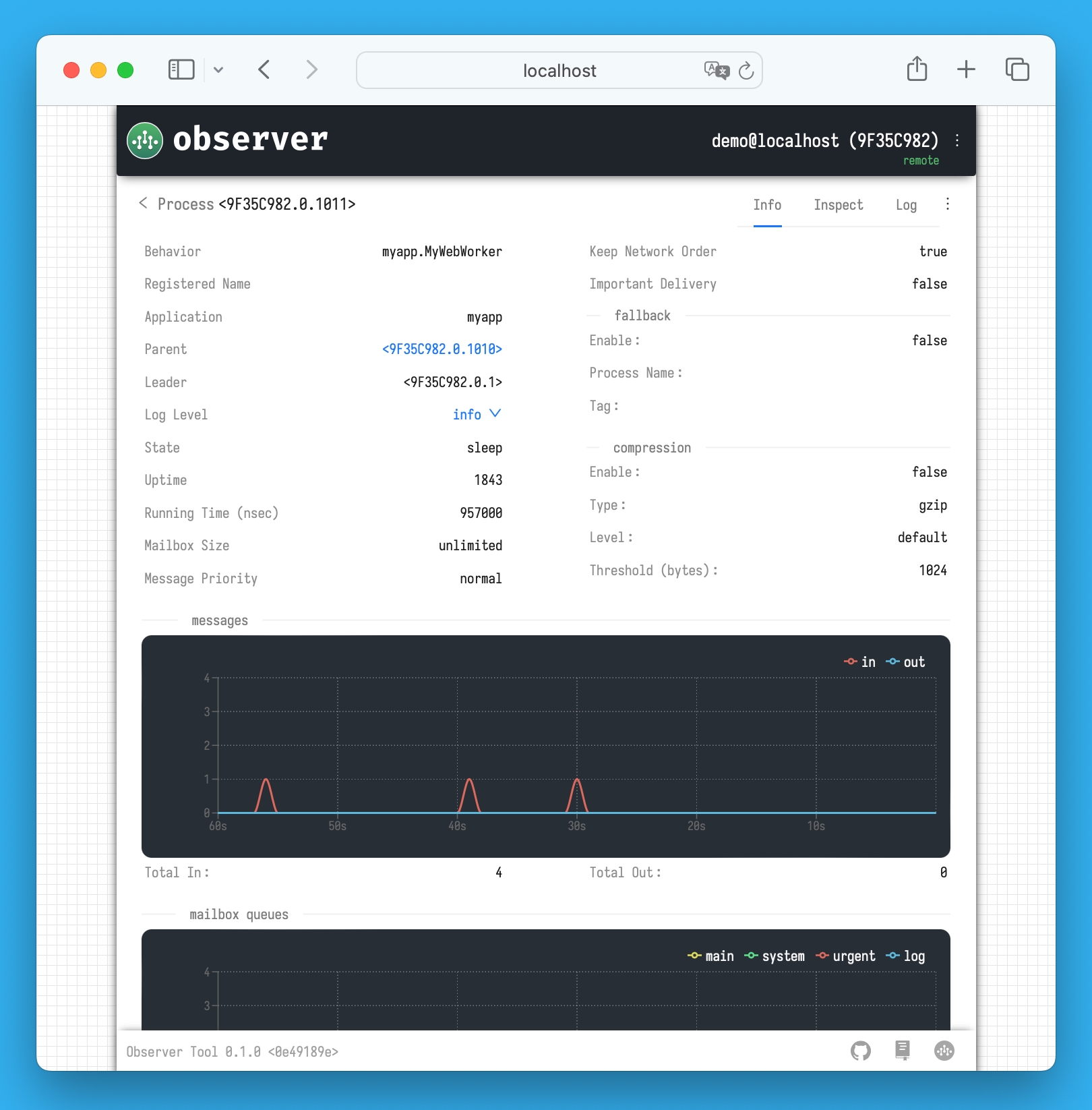

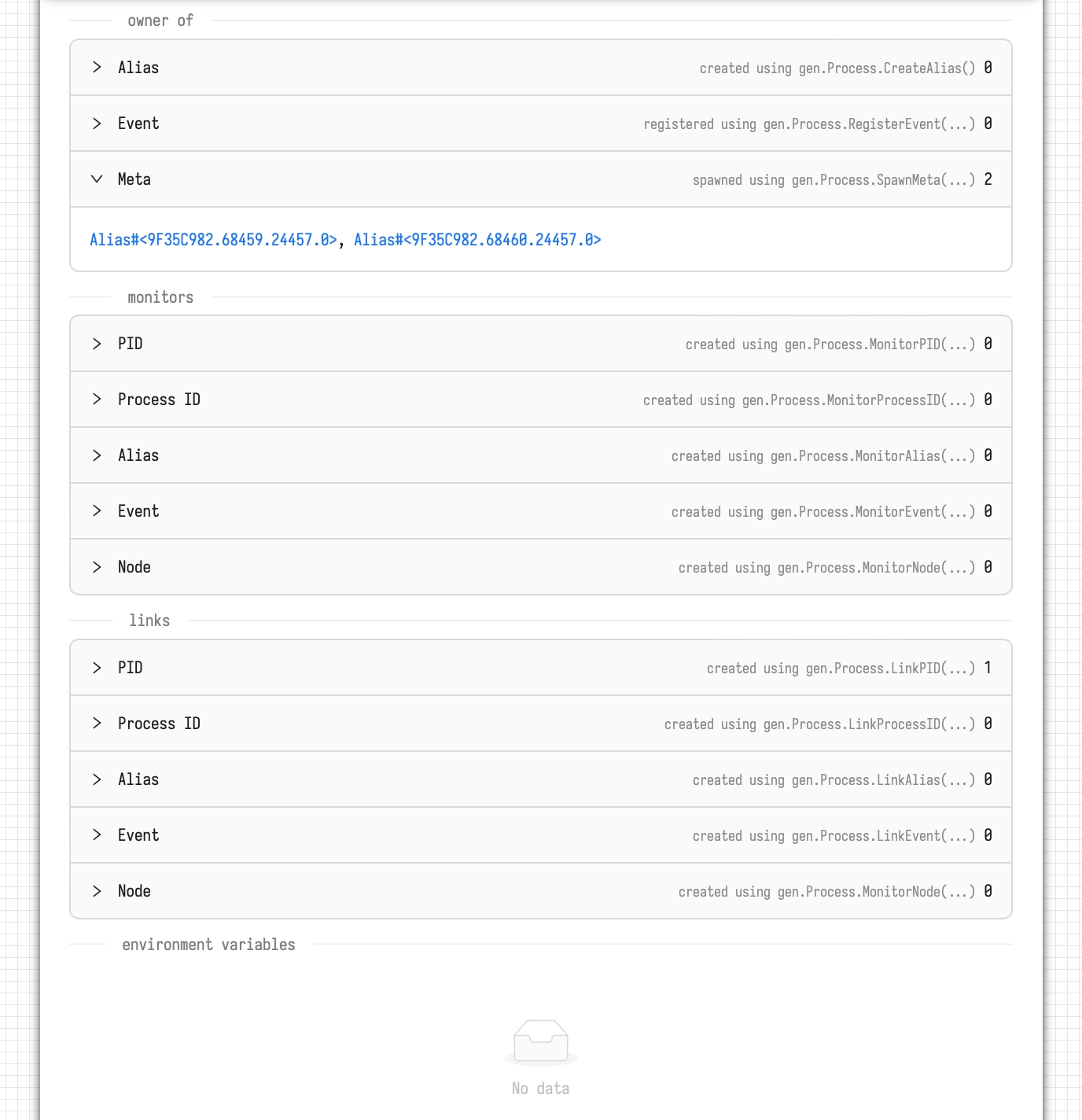

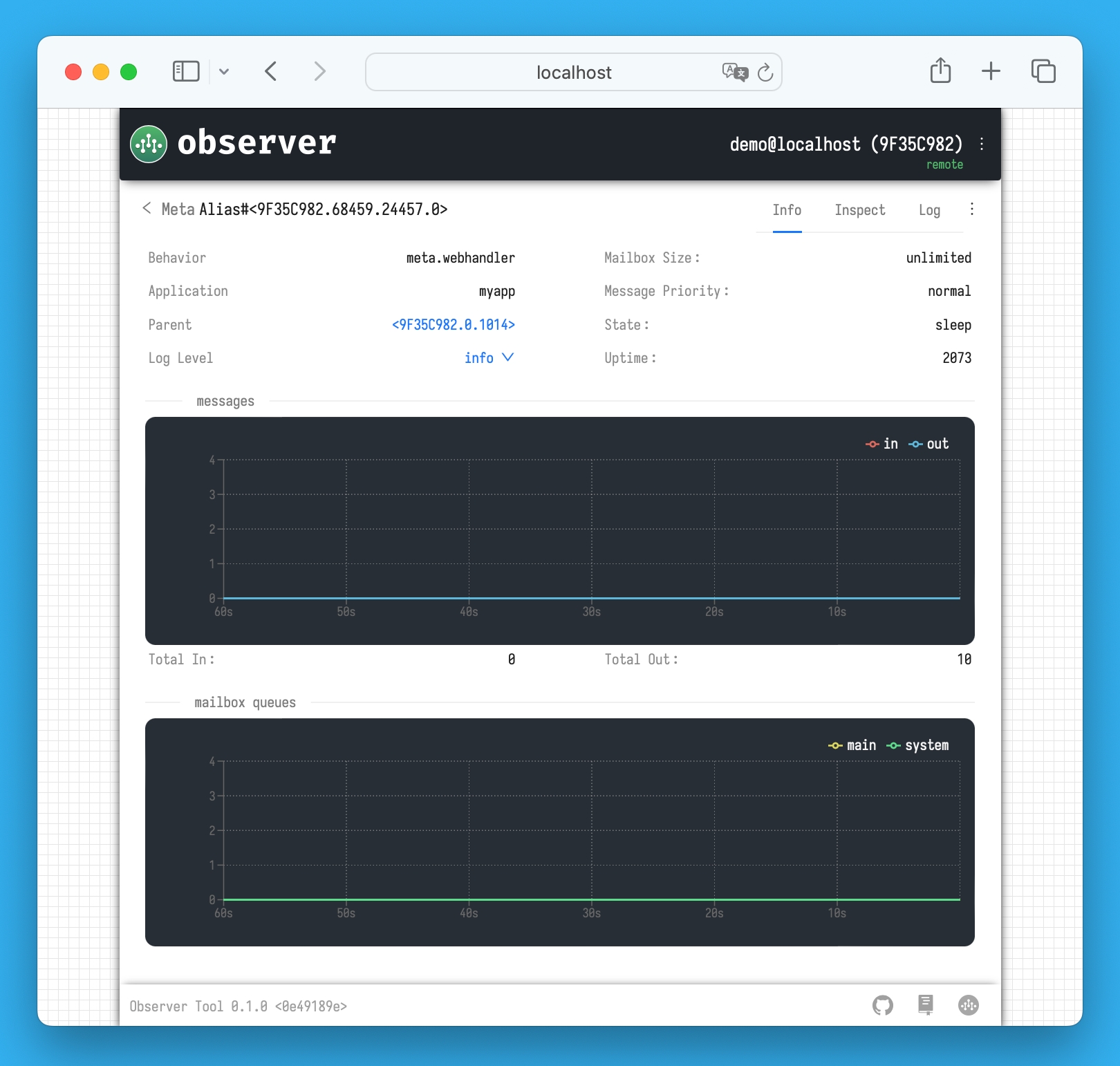

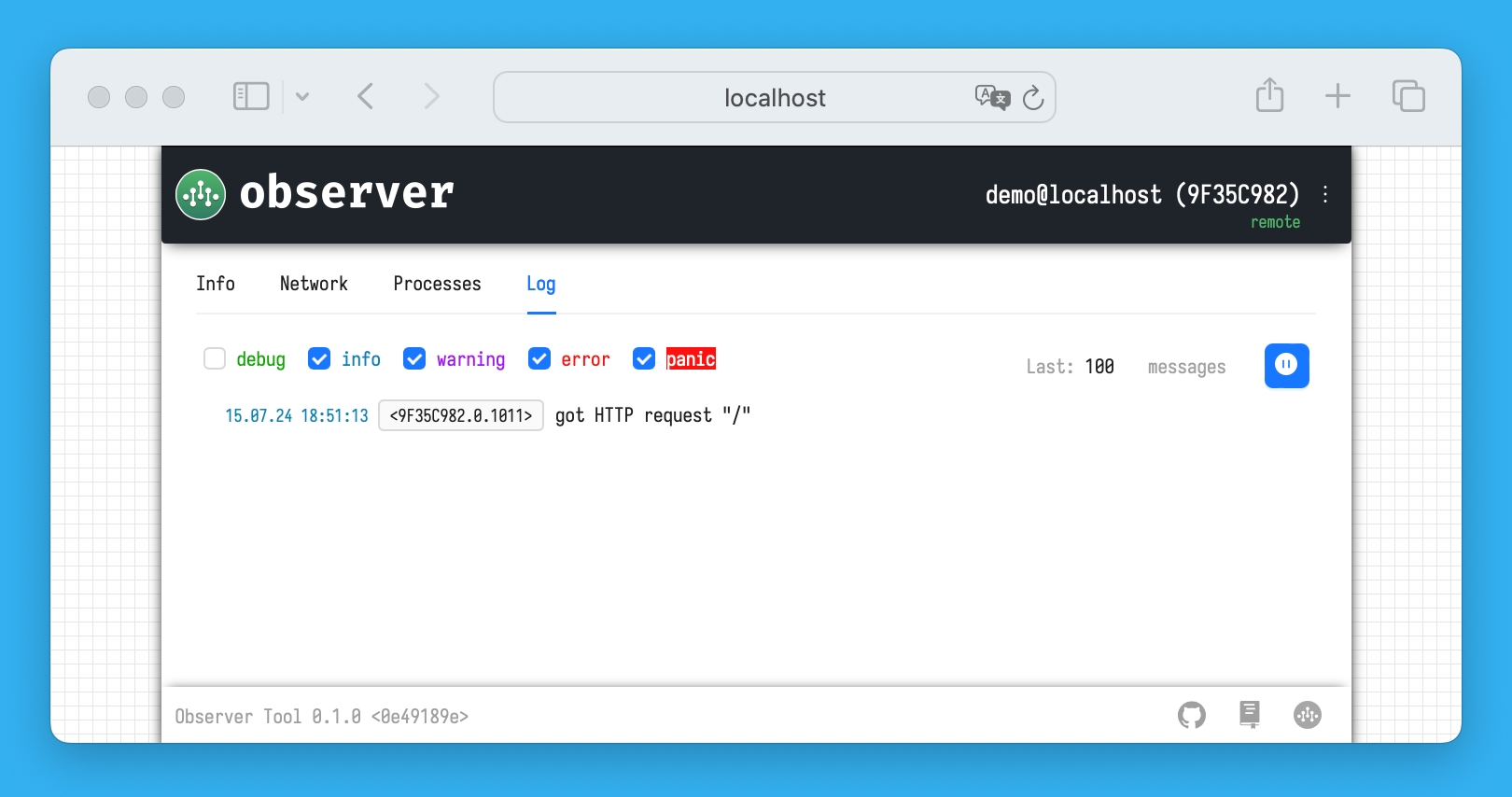

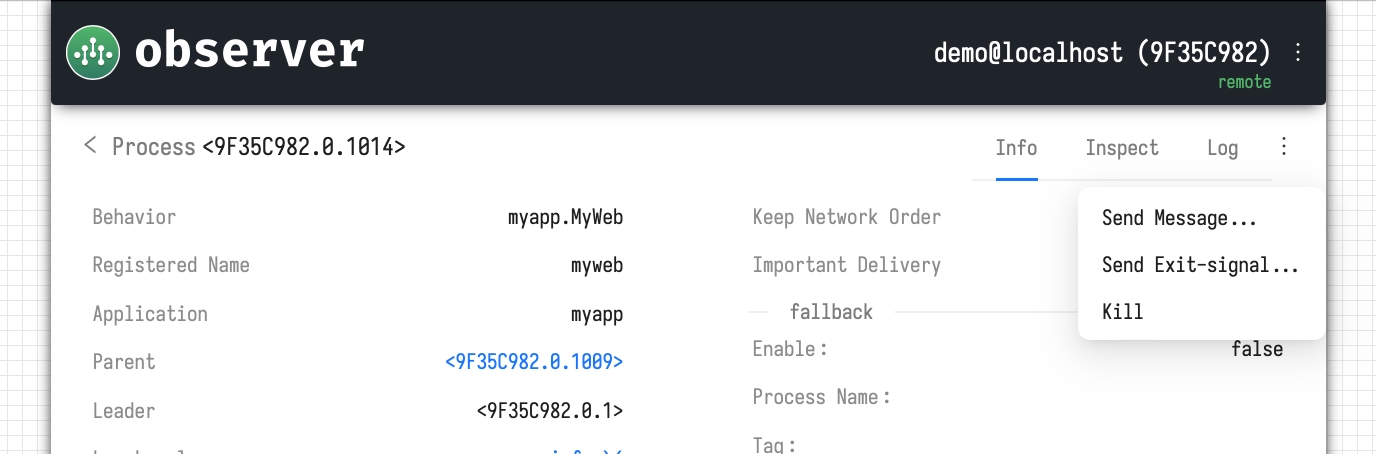

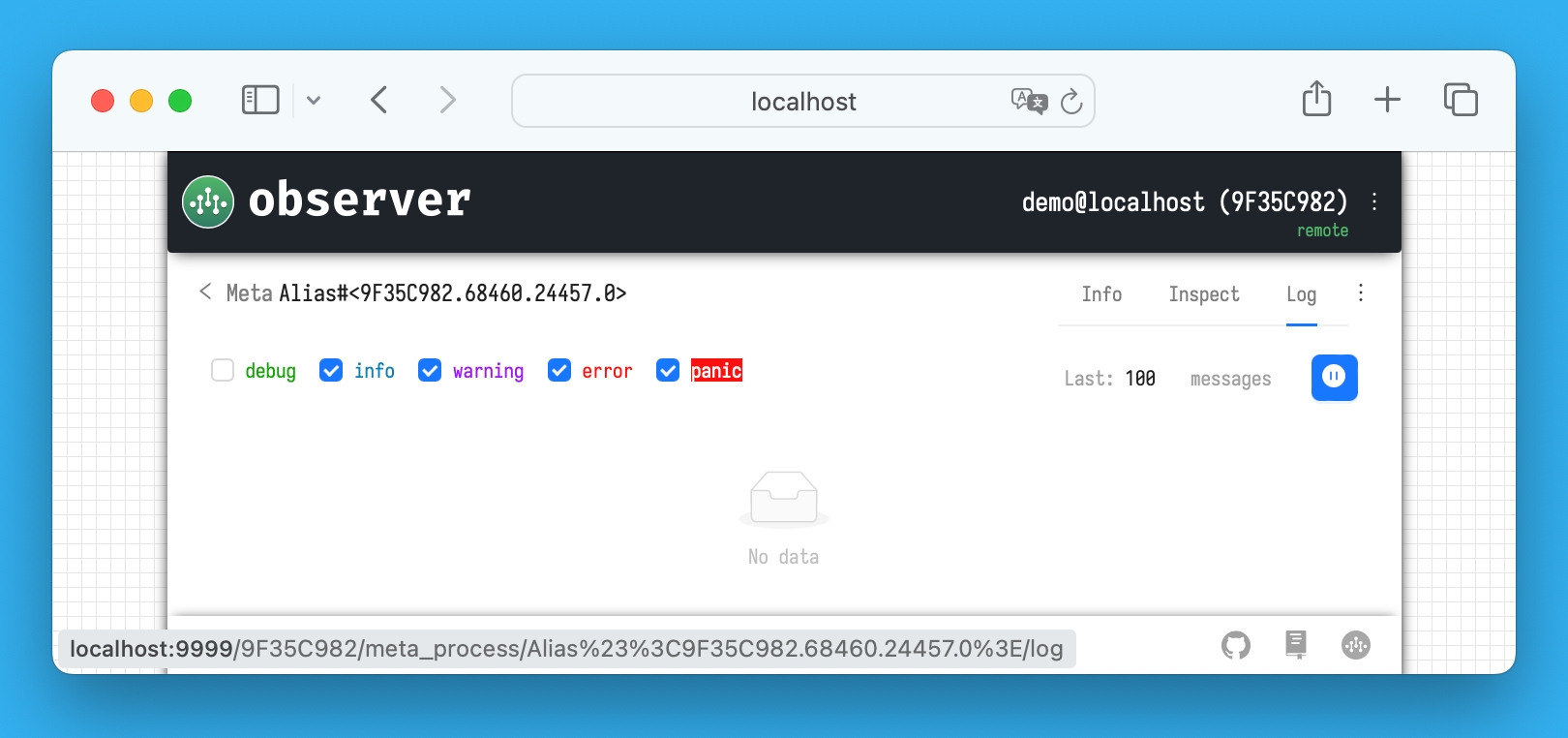

The Observer application provides a convenient web interface for viewing node status, network activity, and running processes on a node built with Ergo Framework. It also lets you inspect the internal state of processes and meta-processes. The application can also be used as a standalone tool, Observer; for more details, see the corresponding section. You can add the Observer application to your node during startup by including it in the node's startup options:

The function observer.CreateApp takes observer.Options as an argument, allowing you to configure the Observer application. You can set:

Port: The port number for the web server (default: 9911 if not specified).

The network stack in Ergo Framework includes the capability to spawn processes on remote nodes. Nodes can control access to this feature or disable it entirely by using the EnableRemoteSpawn flag in gen.NetworkFlags. By default, this feature is enabled.

To manage the ability of remote nodes to spawn processes, the gen.Network interface provides two methods:

The name

In addition to the ability to spawn processes on remote nodes, Ergo Framework also allows you to start applications remotely. Similar to process spawning, access to this functionality is controlled by the EnableRemoteApplicationStart flag in gen.NetworkFlags. By default, this flag is enabled.

To allow an application to be started from a remote node, it must be registered in the network stack. The gen.Network interface provides the following methods for controlling this functionality:

The name

type UDPServerOptions struct {

Host string

Port uint16

Process gen.Atom

BufferSize int

BufferPool *sync.Pool

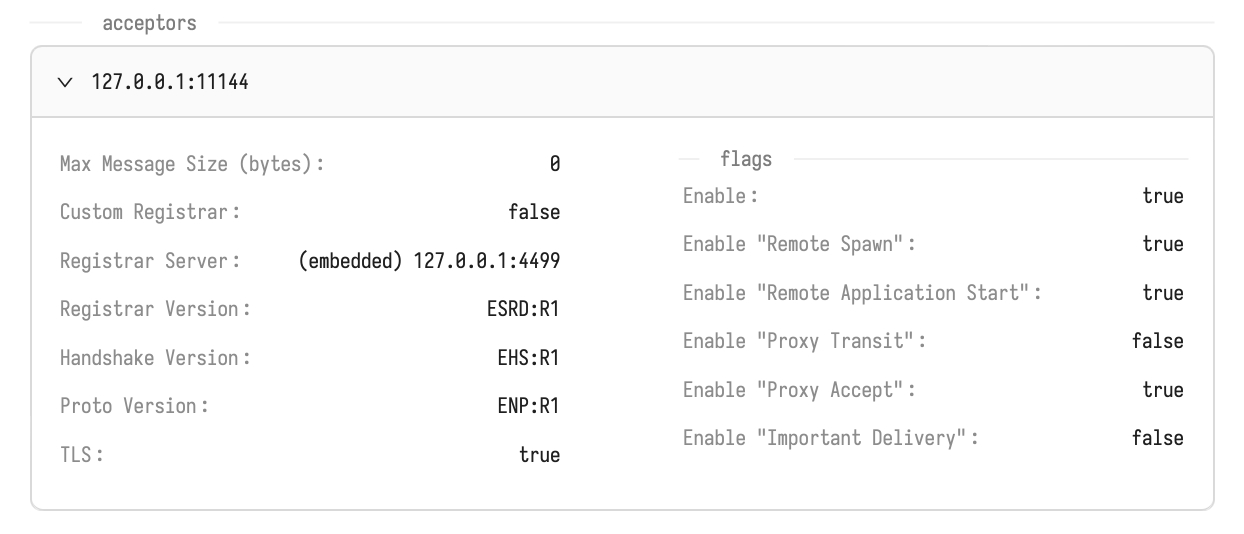

}When a node starts, it establishes a TCP connection with the Registrar server, which remains open until the node terminates. During registration, your node communicates its name, information about all acceptors, and their parameters (port number, Handshake/Proto version, TLS flag) to the Registrar. If using Saturn, additional information about running applications on the node is also communicated (this functionality is not supported by the built-in Registrar).

When a node running the Registrar in server mode terminates, other nodes on the same host automatically attempt to switch to server mode. The node that first successfully opens the TCP socket on localhost:4499 switches to server mode, while the remaining nodes on the host continue to operate in client mode and automatically register with the new Registrar running in server mode.
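The "first node to open the socket becomes the server" rule can be sketched with the standard `net` package. This is a simplified illustration rather than the Registrar's implementation: the function name and the string return values are hypothetical, and the real Registrar also opens a UDP socket and registers with the server when it falls back to client mode.

```go
package main

import (
	"fmt"
	"net"
)

// startRegistrar tries to claim addr. The node that succeeds acts as the
// server; any node that fails (the address is already in use) falls back
// to client mode.
func startRegistrar(addr string) (string, net.Listener) {
	l, err := net.Listen("tcp", addr)
	if err != nil {
		return "client", nil
	}
	return "server", l
}

func main() {
	mode, l := startRegistrar("localhost:4499")
	if l != nil {
		defer l.Close()
	}
	fmt.Println("this node runs the registrar in", mode, "mode")
}
```

Because the operating system allows only one listener per address and port, the election needs no coordination between nodes: whichever node binds first wins.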

Node names must be unique within the same host. If a node attempts to register with a name that has already been registered by another node, the Registrar will return the error gen.ErrTaken.

When a node attempts to establish a network connection with another node, it first sends a resolve request (a UDP packet) to the Registrar, using the host name of the target node and port 4499. In response to this request, the Registrar returns a list of acceptors along with their parameters needed to establish the connection:

The port number where the acceptor is running.

The version of the Handshake protocol.

The version of the Proto protocol.

The TLS flag, which indicates whether TLS encryption is required for this connection.

Using these parameters, the node then establishes the network connection to the target node.
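The register and resolve steps can be modelled with an in-memory table, as in the sketch below. It leaves out the TCP and UDP transport entirely. The `route` struct mirrors the four parameters listed above, but its field names, the use of strings for versions, the port number 15000, and `errTaken` (a stand-in for gen.ErrTaken) are assumptions for illustration.

```go
package main

import (
	"errors"
	"fmt"
)

// errTaken is a local stand-in for gen.ErrTaken.
var errTaken = errors.New("name is taken")

// route carries the acceptor parameters returned by a resolve request.
type route struct {
	Port             uint16
	HandshakeVersion string
	ProtoVersion     string
	TLS              bool
}

// registrar keeps the acceptors of every registered node on the host.
type registrar struct {
	nodes map[string][]route
}

func newRegistrar() *registrar {
	return &registrar{nodes: map[string][]route{}}
}

// register stores the node's acceptors; node names must be unique.
func (r *registrar) register(name string, routes []route) error {
	if _, exists := r.nodes[name]; exists {
		return errTaken
	}
	r.nodes[name] = routes
	return nil
}

// resolve returns the acceptors a peer needs to connect to the node.
func (r *registrar) resolve(name string) ([]route, bool) {
	routes, ok := r.nodes[name]
	return routes, ok
}

func main() {
	r := newRegistrar()
	acceptors := []route{{Port: 15000, HandshakeVersion: "1", ProtoVersion: "1", TLS: true}}

	fmt.Println(r.register("node1", acceptors)) // prints <nil>
	fmt.Println(r.register("node1", acceptors)) // prints name is taken

	routes, ok := r.resolve("node1")
	fmt.Println(ok, routes[0].Port, routes[0].TLS) // prints true 15000 true
}
```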

By default, the built-in network stack of Ergo Framework (ergo.services/ergo/net) is used for both incoming and outgoing connections. However, the node can work with multiple network stacks simultaneously (e.g., for compatibility with Erlang's network stack).

You can create multiple acceptors with different sets of parameters for handling incoming connections. For outgoing connections, you can manage the connection parameters using the Static Routes functionality, which allows you to control how connections are established with various nodes.

Host: The interface name (default: localhost).

LogLevel: The logging level for the Observer application (useful for debugging). The default is gen.LogLevelInfo.

    package main

    import (
        "ergo.services/application/observer"
        "ergo.services/ergo"
        "ergo.services/ergo/gen"
    )

    func main() {
        // Include the Observer application in the node's startup options
        opt := gen.NodeOptions{
            Applications: []gen.ApplicationBehavior{
                observer.CreateApp(observer.Options{}),
            },
        }
        node, err := ergo.StartNode("example@localhost", opt)
        if err != nil {
            panic(err)
        }
        // Block until the node is stopped
        node.Wait()
    }

Handling Messages: meta-processes can handle asynchronous messages from other processes or meta-processes via the HandleMessage callback method of the gen.MetaBehavior interface.

Handling Synchronous Requests: meta-processes can handle synchronous requests from other processes (including remote ones) using the HandleCall callback method of the gen.MetaBehavior interface.

Spawning Other Meta-Processes: meta-processes can spawn other meta-processes using the Spawn method in the gen.MetaProcess interface.

Linking and Monitoring: other processes (including remote ones) can create a link or monitor with the meta-process using the LinkAlias and MonitorAlias methods of the gen.Process interface, referencing the meta-process’s gen.Alias.

Despite these similarities with regular processes, meta-processes have distinct characteristics:

Concurrency: a meta-process operates with two goroutines. The main goroutine starts when the meta-process is created and handles operations on the synchronous object. The termination of this goroutine leads to the termination of the meta-process. The auxiliary goroutine is launched to handle incoming messages from other processes or meta-processes, and it shuts down when there are no more messages in the meta-process’s mailbox. Therefore, access to the meta-process object’s data is concurrent, as it can be accessed by both goroutines simultaneously. This concurrency must be managed carefully when implementing your own meta-process.

Parent Process Dependency: a meta-process can only be started by a process (or another meta-process) using the SpawnMeta method in the gen.Process interface (or the Spawn method in the gen.MetaProcess interface).

No Own Environment Variables: meta-processes do not have their own environment variables. The Env and EnvList methods of the gen.MetaProcess interface return the environment variables of the parent process.

Limitations: meta-processes cannot make synchronous requests, create links, or establish monitors themselves.

Termination with Parent: when the parent process terminates, the meta-process is also automatically terminated.

Meta-processes offer a way to integrate synchronous objects into the asynchronous system while maintaining compatibility with the process communication model. However, due to their concurrent nature and relationship with the parent process, they require careful handling in certain scenarios.
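The concurrency caveat above can be made concrete with a small simulation. The sketch does not use the framework: `counterMeta` is a hypothetical meta-process state, and two plain goroutines stand in for the main goroutine and the message-handling goroutine. A mutex is one possible way to guard shared fields; atomics or channels would work as well.

```go
package main

import (
	"fmt"
	"sync"
)

// counterMeta is a hypothetical meta-process state that both goroutines
// of a meta-process may touch at the same time.
type counterMeta struct {
	mu      sync.Mutex
	handled int
}

// touch updates the shared state under the mutex.
func (m *counterMeta) touch() {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.handled++
}

// simulate lets two goroutines update the same state n times each and
// returns the final counter value.
func simulate(n int) int {
	m := &counterMeta{}
	var wg sync.WaitGroup
	wg.Add(2)
	go func() { // stands in for the main goroutine (e.g. reading a socket)
		defer wg.Done()
		for i := 0; i < n; i++ {
			m.touch()
		}
	}()
	go func() { // stands in for the goroutine handling mailbox messages
		defer wg.Done()
		for i := 0; i < n; i++ {
			m.touch()
		}
	}()
	wg.Wait()
	return m.handled
}

func main() {
	fmt.Println(simulate(1000)) // prints 2000
}
```

Without the mutex, the two goroutines would race on `handled`, and the final value could be lower than expected.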

Ergo Framework provides several ready-to-use implementations of meta-processes:

meta.TCP: allows you to launch a TCP server or create a TCP connection.

meta.UDP: for launching a UDP server.

meta.Web: for launching an HTTP server and creating an http.Handler based on a meta-process.

WebSocket Meta-Process: for working with WebSockets, a WebSocket meta-process is available. Since its implementation has a dependency on github.com/gorilla/websocket, it is provided as a separate package: ergo.services/meta/websocket.

These meta-processes offer pre-built solutions for integrating common networking and web functionality into your application.

To start a meta-process, the gen.Process interface provides the SpawnMeta method:

If a meta-process needs to spawn other meta-processes, the gen.MetaProcess interface implements the Spawn method:

In the gen.MetaOptions options, you can configure:

MailboxSize: size of the meta-process’s mailbox for incoming messages.

SendPriority: priority for messages sent by the meta-process.

LogLevel: the logging level for the meta-process.

Upon successful startup, these methods return the meta-process identifier, gen.Alias. This identifier can be used to:

Send asynchronous messages via the SendAlias method in the gen.Process interface.

Make synchronous requests using the CallAlias method in the gen.Process interface.

Create links or monitors with the meta-process using the LinkAlias or MonitorAlias methods in the gen.Process interface.

To stop a meta-process, you can send it an exit signal using the SendExitMeta method of the gen.Process interface. When the meta-process receives this signal, it triggers the callback method Terminate from the gen.MetaBehavior interface.

Additionally, a meta-process will be terminated in the following cases:

The parent process has terminated.

The main goroutine of the meta-process has finished (i.e., the Start method of the gen.MetaBehavior interface has completed its work).

The HandleMessage (or HandleCall) callback method returned an error while processing an asynchronous message (or a synchronous request).

A panic occurred in any method of the gen.MetaBehavior interface.

To create a custom meta-process in Ergo Framework, you need to implement the gen.MetaBehavior interface. This interface defines the behavior of the meta-process and includes the following key methods:

Start: this method is called when the meta-process starts. It is responsible for initializing the meta-process and running the main logic. The Start method runs in the main goroutine of the meta-process, and when it finishes, the meta-process is terminated.

HandleMessage: this callback is invoked to handle incoming asynchronous messages from other processes or meta-processes. It runs in the auxiliary goroutine that processes the meta-process’s mailbox.

HandleCall: this method processes synchronous requests (calls) from other processes or meta-processes. It is also part of the auxiliary goroutine that processes incoming messages.

Terminate: called when the meta-process receives an exit signal or when its parent process terminates. It is responsible for cleanup and finalizing the meta-process before it is removed.

Here is an example of implementing a custom meta-process in Ergo Framework. It demonstrates how to create a meta-process that reads data from a network connection and sends it to its parent process:

In this example, we embed the gen.MetaProcess interface within the myMeta struct. This allows us to use the methods of gen.MetaProcess (like Send, Parent, and Log) inside the callback methods defined by gen.MetaBehavior.

The HandleMessage method processes asynchronous messages sent to the meta-process. It checks the type of the message and takes appropriate actions, such as writing data to the connection. If the method returns an error, it causes the termination of the meta-process, triggering the Terminate method to handle the cleanup.

The Terminate method ensures that resources like the network connection are properly released when the meta-process ends. If the meta-process encounters an error in HandleMessage or HandleCall, or if any other termination condition occurs, the Terminate method will be called to finalize the shutdown process.

List of available methods in the gen.MetaProcess Interface:

Network Stack: provides service discovery and network transparency, facilitating seamless communication between nodes.

Logging Subsystem

To start a node, the first step is to define its name. The name consists of two parts: <name>@<hostname>, where the hostname determines on which network interface the port for incoming connections will be opened.

The node's name must be unique on the host. This means that two nodes with the same name cannot be running on the same host.

Below is an example code for starting a node:

If the gen.NodeOptions.Applications option includes applications when starting the node, they will be automatically loaded and started. However, if any application fails to start, the ergo.StartNode(...) function will return an error, and the node will shut down. Upon a successful start, this function returns the gen.Node interface.

You can also specify environment variables for the node using the Env option in gen.NodeOptions. All processes started on this node will inherit these variables. The gen.Node interface provides the ability to manage environment variables through methods like EnvList(), SetEnv(...), and Env(...). However, changes to environment variables will only affect newly started processes. Environment variable names are case-insensitive.
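The inheritance rule (a process receives the node's variables at start time, and later changes affect only new processes) can be sketched as a copy made on spawn. The `node` and `process` types below are hypothetical stand-ins, not the gen.Node or gen.Process interfaces, and upper-casing the key is just one way to obtain case-insensitive names.

```go
package main

import (
	"fmt"
	"strings"
)

// node and process are simplified stand-ins for the framework's objects.
type node struct{ env map[string]any }
type process struct{ env map[string]any }

// SetEnv stores the variable under an upper-cased, case-insensitive name.
func (n *node) SetEnv(name string, value any) {
	n.env[strings.ToUpper(name)] = value
}

// Spawn gives the new process a copy of the node's current environment.
func (n *node) Spawn() *process {
	p := &process{env: make(map[string]any, len(n.env))}
	for k, v := range n.env {
		p.env[k] = v
	}
	return p
}

// Env looks a variable up regardless of the case used by the caller.
func (p *process) Env(name string) (any, bool) {
	v, ok := p.env[strings.ToUpper(name)]
	return v, ok
}

func main() {
	n := &node{env: map[string]any{}}
	n.SetEnv("region", "eu")
	early := n.Spawn()

	n.SetEnv("Debug", true) // set after the first process has started
	late := n.Spawn()

	_, seenByEarly := early.Env("DEBUG")
	_, seenByLate := late.Env("debug")
	fmt.Println(seenByEarly, seenByLate) // prints false true
}
```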

Additionally, the gen.Node interface provides the following methods:

Starting/Stopping Processes: methods like Spawn(...), SpawnRegister(...) allow you to start processes, while Kill(...) and SendExit(...) are used to stop them.

Retrieving Process Information: use the ProcessInfo(...) method to get information about a process, and the MetaInfo(...) method to retrieve information about meta-processes.

Managing the Node's Network Stack: access the gen.Network interface through the Network() method to manage the node's network operations.

Getting Node Uptime: the Uptime() method provides the node's uptime in seconds.

General Node Information: use the Info() method to retrieve general information about the node.

Sending Asynchronous Messages: the Send(...) method allows you to send asynchronous messages to a process.

The full list of available methods for the gen.Node interface can be found in the reference documentation.

The mechanism for starting and stopping processes is provided by the node's core. Each process is assigned a unique identifier, gen.PID, which facilitates message routing.

The node also allows you to register a name associated with a process. You can register such a name at the time of process startup by using the SpawnRegister method and specifying the desired name. Alternatively, you can use the RegisterName method from the gen.Node or gen.Process interfaces to assign a name to an already running process.

A process can only have one associated name. If you want to change a process's name, you must first unregister the existing name using the UnregisterName method from the gen.Node or gen.Process interfaces. Upon success, this method returns the gen.PID of the process previously associated with that name.
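The naming rules from the last two paragraphs (unique names, one name per process, unregistering returns the owner) can be captured in a small table. This sketch is not the node's implementation: `pid` is a plain integer rather than gen.PID, `errTaken` stands in for gen.ErrTaken, and the other two errors are invented for the example.

```go
package main

import (
	"errors"
	"fmt"
)

var (
	errTaken       = errors.New("name is taken")              // stand-in for gen.ErrTaken
	errNamed       = errors.New("process already has a name") // hypothetical
	errUnknownName = errors.New("unknown name")               // hypothetical
)

// pid is a simplified process identifier.
type pid uint64

// names maps registered names to processes and back.
type names struct {
	byName map[string]pid
	byPID  map[pid]string
}

func newNames() *names {
	return &names{byName: map[string]pid{}, byPID: map[pid]string{}}
}

// RegisterName associates a name with a process that has none yet.
func (n *names) RegisterName(name string, p pid) error {
	if _, ok := n.byName[name]; ok {
		return errTaken
	}
	if _, ok := n.byPID[p]; ok {
		return errNamed
	}
	n.byName[name] = p
	n.byPID[p] = name
	return nil
}

// UnregisterName removes the name and returns the process that owned it.
func (n *names) UnregisterName(name string) (pid, error) {
	p, ok := n.byName[name]
	if !ok {
		return 0, errUnknownName
	}
	delete(n.byName, name)
	delete(n.byPID, p)
	return p, nil
}

func main() {
	n := newNames()
	fmt.Println(n.RegisterName("worker", 1001))  // prints <nil>
	fmt.Println(n.RegisterName("renamed", 1001)) // prints process already has a name
	owner, _ := n.UnregisterName("worker")
	fmt.Println(owner, n.RegisterName("renamed", owner)) // prints 1001 <nil>
}
```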

A process can be terminated by the node by sending it an exit signal. This is done via the SendExit method in the gen.Node interface. If necessary, the node can also forcefully stop a process using the Kill method provided by the gen.Node interface.

One of the key responsibilities of a node is message routing between processes. This routing is transparent to both the sender and the receiver processes. If the recipient process is located on a remote node, the node will automatically attempt to establish a network connection to the remote node and deliver the message to the recipient. This is the network transparency provided by the Ergo Framework—you don't need to worry about how to send the message or how to encode it, as the node handles all of this automatically and transparently for you.

Message routing is not limited to the gen.PID process identifier. A message can also be addressed using:

Local Process Name (gen.Atom): messages can be sent to a local process by referencing its registered name.

gen.Alias: this is a unique identifier that can be created by the process itself. It is often used for meta-processes or as a temporary identifier for a process. You can read more about this feature in the Process section.

gen.ProcessID: This is used for sending messages by the recipient process's name. The structure contains two fields—Name and Node. It is a convenient way to send a message to a process on a remote node when you do not know the gen.PID or gen.Alias of the remote process.

Through these flexible options, the Ergo Framework provides a robust and seamless message routing system, simplifying communication between processes whether they are local or remote.

The core of the node implements a publisher/subscriber mechanism, which serves as the foundation for process linking, monitoring, and connections with other nodes.

Monitoring Functionality: this allows any process to monitor other processes or nodes. In the event that a monitored process stops or the connection to a node is lost, the process that created the monitor will receive a gen.MessageDownPID or gen.MessageDownNode message, respectively.

Linking Functionality: similar to monitoring, linking differs in that when a linked process terminates or the connection to a node is lost, the process that created the link will receive an exit signal, causing it to stop as well.

Event System: the publisher/subscriber mechanism is also used in the events functionality. It allows any process to register its own event type and act as a producer of those events, while other processes can subscribe to those events.

Thanks to network transparency, the pub/sub subsystem works not only for local processes but also for remote ones.

You can read more about these features in the sections on Links and Monitors and Events.

The process of sending a message to a remote node involves several steps:

Connection Establishment: if a connection to the remote node has not yet been created, the node queries the registrar on the host where the target node (owner of the recipient gen.PID, gen.ProcessID, or gen.Alias) is running. The registrar returns the port number on which the target node accepts incoming connections. The connection to this node is then established. This connection remains active until one of the nodes explicitly closes it by calling Disconnect from the gen.RemoteNode interface.

Message Encoding: the message is encoded into the binary EDF format.

Data Compression: if compression was enabled for the sender process, the binary data is compressed.

Message Transmission: the message is sent over the network using the ENP protocol.

Message Decoding and Delivery: the remote node automatically decodes the received message and delivers it to the recipient process's mailbox.
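The encode, compress, and decode steps above can be sketched with the standard library alone. This is only an illustration under stated assumptions: encoding/gob stands in for the EDF format, gzip stands in for the framework's compression, the network transfer over ENP is omitted, and the envelope type and the encode/decode helpers are hypothetical names, not part of Ergo Framework.

```go
package main

import (
	"bytes"
	"compress/gzip"
	"encoding/gob"
	"fmt"
	"io"
)

// envelope is a hypothetical stand-in for a routed message.
type envelope struct {
	To      string
	Payload string
}

// encode serializes the envelope (gob stands in for EDF) and,
// if requested, compresses the resulting bytes with gzip.
func encode(msg envelope, compress bool) ([]byte, error) {
	var raw bytes.Buffer
	if err := gob.NewEncoder(&raw).Encode(msg); err != nil {
		return nil, err
	}
	if !compress {
		return raw.Bytes(), nil
	}
	var packed bytes.Buffer
	zw := gzip.NewWriter(&packed)
	if _, err := zw.Write(raw.Bytes()); err != nil {
		return nil, err
	}
	if err := zw.Close(); err != nil {
		return nil, err
	}
	return packed.Bytes(), nil
}

// decode reverses encode on the receiving side.
func decode(data []byte, compressed bool) (envelope, error) {
	var msg envelope
	var r io.Reader = bytes.NewReader(data)
	if compressed {
		zr, err := gzip.NewReader(r)
		if err != nil {
			return msg, err
		}
		defer zr.Close()
		r = zr
	}
	err := gob.NewDecoder(r).Decode(&msg)
	return msg, err
}

func main() {
	out := envelope{To: "worker@remote", Payload: "hello"}
	wire, err := encode(out, true)
	if err != nil {
		panic(err)
	}
	// In the real framework the bytes would travel over the network here.
	in, err := decode(wire, true)
	if err != nil {
		panic(err)
	}
	fmt.Println(in == out)
}
```

In the framework itself none of this is written by hand; the node performs these steps automatically when a message is addressed to a remote process.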

For more detailed information on network interactions, refer to the Network Stack section.

You can stop a node using the Stop() or StopForce() methods from the gen.Node interface.

Stop: all processes will be sent an exit signal with the reason gen.TerminateReasonShutdown from the parent process, and the node will wait for all processes to terminate. Once all processes have stopped, the node's network stack and the node itself will be shut down.

StopForce: in the case of a forced shutdown, all processes will be terminated using the Kill method from the gen.Node interface, without waiting for their graceful shutdown.

Using EnableSpawn with the nodes argument creates an access list, allowing only the specified remote nodes to spawn processes with the registered factory. Conversely, using DisableSpawn with the nodes argument removes the specified nodes from the access list. If the list becomes empty, access is open to all nodes. To fully disable access to the process factory, use DisableSpawn without the nodes argument.
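The access-list rules described above can be modeled in a few lines. The sketch below is not the framework's implementation; the accessList type and its methods are hypothetical and only mirror the stated semantics: listed nodes are allowed, an empty list means every node is allowed, and disabling without arguments closes access entirely.

```go
package main

import "fmt"

// accessList is a hypothetical model of the rules for one registered factory.
type accessList struct {
	enabled bool
	nodes   map[string]bool // empty means "open to all nodes"
}

// enable registers the factory and adds the given nodes to the list.
func (a *accessList) enable(nodes ...string) {
	a.enabled = true
	if a.nodes == nil {
		a.nodes = map[string]bool{}
	}
	for _, n := range nodes {
		a.nodes[n] = true
	}
}

// disable removes the given nodes; without arguments it closes access fully.
func (a *accessList) disable(nodes ...string) {
	if len(nodes) == 0 {
		a.enabled = false
		a.nodes = nil
		return
	}
	for _, n := range nodes {
		delete(a.nodes, n)
	}
}

// allowed reports whether the node may spawn with this factory.
func (a *accessList) allowed(node string) bool {
	if !a.enabled {
		return false
	}
	return len(a.nodes) == 0 || a.nodes[node]
}

func main() {
	var list accessList
	list.enable("node1@host", "node2@host")
	fmt.Println(list.allowed("node1@host"), list.allowed("node3@host"))

	// Removing every listed node empties the list, which opens access to all.
	list.disable("node1@host", "node2@host")
	fmt.Println(list.allowed("node3@host"))

	// Disabling without arguments closes access entirely.
	list.disable()
	fmt.Println(list.allowed("node3@host"))
}
```

The point worth noticing is the empty-list case: removing the last node from the list widens access rather than narrowing it, so a full shutdown of remote spawning requires the call without the nodes argument.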

To spawn a process on a remote node, you need to use the gen.RemoteNode interface, which can be obtained using the GetNode or Node methods of the gen.Network interface. The gen.RemoteNode interface provides two methods:

For the name argument, you must use the name under which the process factory is registered on the remote node.

Just like with local process spawning, the process started on the remote node will inherit certain parameters from its parent. In this case, the parent is the virtual identifier of the node that sent the spawn request. The process will also inherit the logging level.

To inherit environment variables, you need to enable the ExposeEnvRemoteSpawn option in gen.NodeOptions.Security when starting your node. The environment variable values must be encodable and decodable using the EDF format. If you are using a custom type as the value of an environment variable, that type must be registered (see the Network Transparency section).

Upon success, these methods return the process identifier (gen.PID) of the process that was successfully started on the remote node.

You can also spawn a process on a remote node using the methods provided by the gen.Process interface:

When using these methods, the process started on the remote node will inherit parameters from the parent process, including the application name, logging level, and environment variables (if the ExposeEnvRemoteSpawn flag in gen.NodeOptions.Security was enabled).

Note that linking options in gen.ProcessOptions will be ignored when spawning processes remotely.

Using the EnableApplicationStart method with the nodes argument creates an access list of remote nodes that are permitted to start the specified application.

When using the DisableApplicationStart method with the nodes argument, it removes the specified nodes from the access list. If the access list becomes empty after removing nodes, access is opened to all remote nodes. To fully disable access to starting the application, use the DisableApplicationStart method without the nodes argument.

To start an application on a remote node, use the ApplicationStart method of the gen.RemoteNode interface (which you can access via the GetNode or Node methods of the gen.Network interface). This interface also provides additional methods to control the application's startup mode:

When an application is started remotely, the Parent property of the application is assigned the name of the node that initiated the startup. You can retrieve information about the application and its status using the ApplicationInfo method of the gen.Node interface.

EnableApplicationStart(name gen.Atom, nodes ...gen.Atom) error

DisableApplicationStart(name gen.Atom, nodes ...gen.Atom) error

SpawnMeta(behavior gen.MetaBehavior, options gen.MetaOptions) (gen.Alias, error)

Spawn(behavior gen.MetaBehavior, options gen.MetaOptions) (gen.Alias, error)

type MetaBehavior interface {

// callback method for main goroutine

Start(process MetaProcess) error

// callback methods for the auxiliary goroutine

HandleMessage(from PID, message any) error

HandleCall(from PID, ref Ref, request any) (any, error)

Terminate(reason error)

HandleInspect(from PID) map[string]string

}

func createMyMeta(conn net.Conn) gen.MetaBehavior {

return &myMeta{

conn: conn,

}

}

type myMeta struct {

gen.MetaProcess // Embedding the gen.MetaProcess interface

conn net.Conn // Network connection

}

func (mm *myMeta) Start(meta gen.MetaProcess) error {

// Assign the meta-process instance to the embedded interface

mm.MetaProcess = meta

// Ensure that the socket is closed upon exit

defer mm.conn.Close()

// Read data from the socket in a loop

for {

buf := make([]byte, 1024)

n, err := mm.conn.Read(buf)

if err != nil {

return err // Terminate the meta-process on error

}

// Send the data to the parent process

mm.Send(mm.Parent(), buf[:n])

}

return nil // meta-process terminated

}

func (mm *myMeta) HandleMessage(from gen.PID, message any) error {

// Log information about the parent process

mm.Log().Info("my parent process is %s", mm.Parent())

// Handle received messages as byte slice

if data, ok := message.([]byte); ok {

// Write the data back to the connection

mm.conn.Write(data)

return nil

}

// Log if the message is of an unknown type

mm.Log().Info("got unknown message %#v", message)

return nil

}

func (mm *myMeta) Terminate(reason error) {

// Close the connection when the meta-process is terminated

mm.conn.Close()

}

type MetaProcess interface {

ID() Alias // Returns the ID (gen.Alias) of this meta-process

Parent() PID // Returns the PID of the parent process

Send(to any, message any) error // Sends an asynchronous message to another process or meta-process

Spawn(behavior MetaBehavior, options MetaOptions) (Alias, error) // Spawns a new meta-process

Log() Log // Provides the gen.Log interface for logging

}

import (

"ergo.services/ergo"

"ergo.services/ergo/gen"

)

func main() {

name := gen.Atom("example@localhost")

// Node options; the zero value is used here for brevity

var opts gen.NodeOptions

// Start node. Returns gen.Node interface

node, err := ergo.StartNode(name, opts)

if err != nil {

panic(err)

}

node.Wait()

}

EnableSpawn(name gen.Atom, factory gen.ProcessFactory, nodes ...gen.Atom) error

DisableSpawn(name gen.Atom, nodes ...gen.Atom) error

Spawn(name gen.Atom, options gen.ProcessOptions, args ...any) (gen.PID, error)

SpawnRegister(register gen.Atom, name gen.Atom, options gen.ProcessOptions, args ...any) (gen.PID, error)

RemoteSpawn(node gen.Atom, name gen.Atom, options gen.ProcessOptions, args ...any) (gen.PID, error)

RemoteSpawnRegister(node gen.Atom, name gen.Atom, register gen.Atom, options gen.ProcessOptions, args ...any) (gen.PID, error)

ApplicationStartTemporary(name gen.Atom, options gen.ApplicationOptions) error

ApplicationStartTransient(name gen.Atom, options gen.ApplicationOptions) error

ApplicationStartPermanent(name gen.Atom, options gen.ApplicationOptions) error

gen.EventOptions

Notify: This flag controls whether the producer should be notified about the presence or absence of subscribers for the event. If notifications are enabled, the process will receive a gen.MessageEventStart message when the first subscriber appears and a gen.MessageEventStop message when the last subscriber unsubscribes. This allows the producer to generate events only when there are active subscribers. If the event is registered using the RegisterEvent method of the gen.Node interface, this field is ignored.

Buffer: This specifies how many of the most recent events should be stored in the event buffer. If this option is set to zero, buffering is disabled.
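The behavior of the Buffer option can be illustrated with a small bounded buffer that keeps only the most recent entries. This is a sketch of the idea, not the framework's code; the eventBuffer type and its methods are hypothetical, and events are reduced to plain strings.

```go
package main

import "fmt"

// eventBuffer is a hypothetical model of a producer-side event buffer.
type eventBuffer struct {
	size   int      // maximum number of stored events; zero disables buffering
	events []string // oldest first
}

// push stores an event, dropping the oldest one when the buffer is full.
func (b *eventBuffer) push(e string) {
	if b.size == 0 {
		return
	}
	if len(b.events) == b.size {
		b.events = b.events[1:]
	}
	b.events = append(b.events, e)
}

// recent returns a copy of the stored events, as a new subscriber would see them.
func (b *eventBuffer) recent() []string {
	return append([]string(nil), b.events...)
}

func main() {
	buf := &eventBuffer{size: 2}
	for _, e := range []string{"first", "second", "third"} {
		buf.push(e)
	}
	fmt.Println(buf.recent())

	disabled := &eventBuffer{size: 0}
	disabled.push("ignored")
	fmt.Println(len(disabled.recent()))
}
```

With a size of two, a late subscriber sees only the last two events; with a size of zero it sees none, which matches the case of an empty list returned when subscribing.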

The RegisterEvent function returns a token of type gen.Ref upon success. This token is used to generate events. Only the process that owns the event, or a process that has been delegated the token by the event owner, can produce events for that registration.

To generate events, the gen.Process interface provides the SendEvent method. This method accepts the following arguments:

name: The name of the registered event (gen.Atom).

token: The key obtained during the event registration (gen.Ref).

message: The event payload, which can be of any type.

To generate events, the gen.Node interface provides the SendEvent method. This method is similar to the SendEvent method in the gen.Process interface but includes an additional parameter, gen.MessageOptions. This extra parameter allows for further customization of how the event message is sent, such as setting priority, compression, or other message-related options.

To subscribe to a registered event, the gen.Process interface provides the following methods:

LinkEvent: This method creates a link to a gen.Event. The process will receive an exit signal if the producer process of the event terminates or if the event is unregistered. To remove the link, use the UnlinkEvent method. When the link is created, the method returns a list of the most recent events from the producer's buffer.

MonitorEvent: This method creates a monitor on a gen.Event. The process will receive a gen.MessageDownEvent if the producer process terminates or if the event is unregistered. If the event is unregistered, the Reason field in gen.MessageDownEvent will contain gen.ErrUnregistered. To remove the monitor, use the DemonitorEvent method.

Both methods accept an argument of type gen.Event. For an event registered on the local node, you only need to specify the Name field, and you can leave the Node field empty. This simplifies subscribing to local events while still providing flexibility for handling events from remote nodes.

Upon successfully creating a link or monitor, the function returns a list of events (gen.MessageEvent) provided by the producer process from its message buffer. Each event contains the following information: the event name (gen.Event), the timestamp of when the event was generated (obtained using time.Now().UnixNano()), and the actual event value sent by the producer process. If the list of events is empty, it means that either the producer process has not yet generated any events or the producer registered the event with a zero-sized buffer.

For an example demonstrating the capabilities of the events mechanism, you can refer to the events project in the ergo-services/examples repository.

gen.Atom, gen.PID, gen.ProcessID, gen.Ref, gen.Alias, gen.Event

TimeFormat Sets the format for the timestamp of log messages. You can use any existing format (see the time package) or define your own. By default, the time is displayed in nanoseconds.

ShortLevelName Displays the shortened name of the log level

IncludeBehavior Includes the name of the process behavior in the log message

IncludeName Includes the registered name of the process in the log message

To handle HTTP requests

The act.WebWorker actor implements the low-level gen.ProcessBehavior interface and allows HTTP requests to be handled as asynchronous messages. It is designed to be used with a web server (refer to the Web section in meta-processes). To launch a process based on act.WebWorker, you need to embed it in your object and implement the required methods from the act.WebWorkerBehavior interface.

Example:

type MyWebWorker struct {

act.WebWorker

}

func factoryMyWebWorker() gen.ProcessBehavior {

return &MyWebWorker{}

}

To work with your object, act.WebWorker uses the act.WebWorkerBehavior interface. This interface defines a set of callback methods:

All methods in the act.WebWorkerBehavior interface are optional for implementation.

It is most efficient to use act.WebWorker in combination with act.Pool for load balancing when handling HTTP requests:

An example implementation of a web server using act.WebWorker and act.Pool for load distribution when handling HTTP requests can be found in the demo project of the ergo-services/examples repository.

This package implements the gen.LoggerBehavior interface and provides the capability to log messages to a file, with support for log file rotation at a specified interval.

Period Specifies the rotation period (minimum value: time.Minute)

TimeFormat Sets the format for the timestamp in log messages. You can choose any existing format (see the time package) or define your own. By default, timestamps are in nanoseconds

IncludeBehavior Includes the process behavior in the log

IncludeName Includes the registered process name

ShortLevelName Enables short names for the log levels

Path directory for the log files, default: ./log

Prefix defines the log files name prefix (<Path>/<Prefix>.YYYYMMDDHHMi.log[.gz])

Compress Enables gzip compression for log files

Depth Specifies the number of log files in rotation

Managing outgoing connections

When creating an outgoing connection to a remote node, the node first checks the internal Static Routing Table for an existing route. If no static route is found for the specified remote node's name, the node uses the Registrar and sends a resolve request to obtain the necessary connection parameters (see the Service Discovery section for more information).

To define a static route for a specific node or group of nodes, use the AddRoute method of the gen.Network interface. This method allows you to manually configure the connection parameters for outgoing connections, ensuring more control over how the node establishes connections with the specified nodes, bypassing the need to query the Registrar.

match: defines the name of the remote node or a pattern to match multiple nodes. The node uses the MatchString method from Go's standard library regexp package to match the node names based on this pattern.

route: specifies the connection parameters to be used when establishing a connection to the matched node(s). This includes details such as the network protocol, port, TLS settings, and other connection options.

weight: defines the weight of the specified route. If multiple routes match the same node name in the routing table, the node will return a list of routes sorted by descending weight. The node will then use the route with the highest weight to establish the connection.
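The lookup described by match and weight can be sketched with the standard library. The staticRoute type and the lookup function below are hypothetical and simplified (a port number stands in for the full connection parameters); only regexp.MatchString and the descending sort by weight come from the description above.

```go
package main

import (
	"fmt"
	"regexp"
	"sort"
)

// staticRoute is a hypothetical, simplified routing table entry.
type staticRoute struct {
	match  string // node name or regular expression
	port   int    // stand-in for the full connection parameters
	weight int
}

// lookup returns every route whose pattern matches the node name,
// sorted by descending weight so that the preferred route comes first.
func lookup(table []staticRoute, node string) ([]staticRoute, error) {
	var found []staticRoute
	for _, r := range table {
		ok, err := regexp.MatchString(r.match, node)
		if err != nil {
			return nil, err
		}
		if ok {
			found = append(found, r)
		}
	}
	sort.SliceStable(found, func(i, j int) bool {
		return found[i].weight > found[j].weight
	})
	return found, nil
}

func main() {
	table := []staticRoute{
		{match: `^node1@host$`, port: 11001, weight: 10},
		{match: `@host$`, port: 12000, weight: 50},
		{match: `^other@`, port: 13000, weight: 100},
	}
	routes, err := lookup(table, "node1@host")
	if err != nil {
		panic(err)
	}
	for _, r := range routes {
		fmt.Println(r.match, r.port, r.weight)
	}
}
```

Because the pattern is a regular expression, a plain node name used as match also matches any longer name that contains it; anchoring with ^ and $ avoids that when an exact match is intended.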

To check for the existence of a static route for a specific remote node name, use the Route method of the gen.Network interface. To remove a static route, use the RemoveRoute method of the gen.Network interface, specifying the match value that was used when adding the route.

The route argument in the AddRoute method allows you to specify detailed connection parameters for establishing outgoing connections to remote nodes. This enables fine-grained control over how connections are created:

Resolver: specifies a particular Registrar to be used by the node for resolving the connection. This interface is obtained via the Resolver method of the gen.Registrar interface. This functionality is useful when a node is working with multiple network stacks. For example, see the section for managing clusters with different network stacks simultaneously.

Route: allows you to explicitly define the host name, port number, TLS mode, handshake version, and protocol version. If the Resolver parameter is explicitly set, the route parameters provided will override any route information returned by the Registrar.

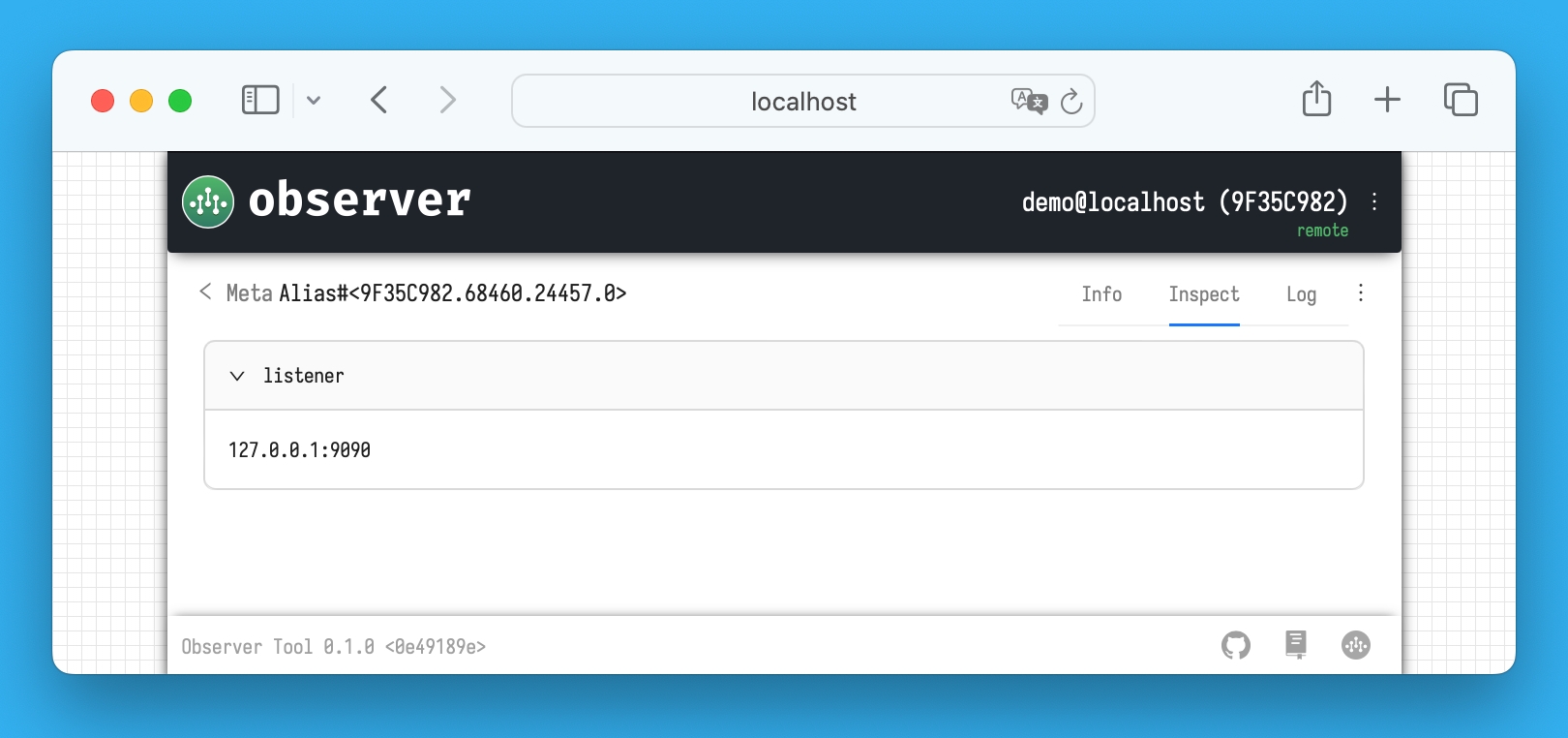

To handle synchronous HTTP requests with the actor model in Ergo Framework, they must be converted into asynchronous messages. For this purpose, two meta-processes have been created: meta.WebServer and meta.WebHandler. The first is used to start an HTTP server, and the second transforms synchronous HTTP requests into messages that are sent to a worker process. A worker process can be created using act.WebWorker, which processes the incoming messages asynchronously.

You can create a meta.WebHandler

type myActor struct {

act.Actor

}

...

func (a *myActor) HandleMessage(from gen.PID, message any) error {

...

// local event

event := gen.Event{Name: "exampleEvent"}

lastEvents, err := a.LinkEvent(event)

...

// remote event

event = gen.Event{Name: "remoteEvent", Node: "remoteNode"}

lastEvents, err = a.LinkEvent(event)

}

package main

import (

"ergo.services/ergo"

"ergo.services/ergo/gen"

"ergo.services/logger/colored"

)

func main() {

logger := gen.Logger{

Name: "colored",

Logger: colored.CreateLogger(colored.Options{}),

}

nopt := gen.NodeOptions{}

nopt.Log.Loggers = []gen.Logger{logger}

// disable default logger to get rid of duplicating log-messages

nopt.Log.DefaultLogger.Disable = true

node, err := ergo.StartNode("demo@localhost", nopt)

if err != nil {

panic(err)

}

node.Log().Warning("Hello World!!!")

node.Wait()

}

type WebWorkerBehavior interface {

gen.ProcessBehavior

// Init invoked on a spawn WebWorker for the initializing.

Init(args ...any) error

// HandleMessage invoked if WebWorker received a message sent with gen.Process.Send(...).

// Non-nil value of the returning error will cause termination of this process.

// To stop this process normally, return gen.TerminateReasonNormal

// or any other for abnormal termination.

HandleMessage(from gen.PID, message any) error

// HandleCall invoked if WebWorker got a synchronous request made with gen.Process.Call(...).

// Return nil as a result to handle this request asynchronously and

// to provide the result later using the gen.Process.SendResponse(...) method.

HandleCall(from gen.PID, ref gen.Ref, request any) (any, error)

// Terminate invoked on a termination process

Terminate(reason error)

// HandleEvent invoked on an event message if this process got subscribed on

// this event using gen.Process.LinkEvent or gen.Process.MonitorEvent

HandleEvent(message gen.MessageEvent) error

// HandleInspect invoked on the request made with gen.Process.Inspect(...)

HandleInspect(from gen.PID, item ...string) map[string]string

// HandleGet invoked on a GET request

HandleGet(from gen.PID, writer http.ResponseWriter, request *http.Request) error

// HandlePost invoked on a POST request

HandlePost(from gen.PID, writer http.ResponseWriter, request *http.Request) error

// HandlePut invoked on a PUT request

HandlePut(from gen.PID, writer http.ResponseWriter, request *http.Request) error

// HandlePatch invoked on a PATCH request

HandlePatch(from gen.PID, writer http.ResponseWriter, request *http.Request) error

// HandleDelete invoked on a DELETE request

HandleDelete(from gen.PID, writer http.ResponseWriter, request *http.Request) error

// HandleHead invoked on a HEAD request

HandleHead(from gen.PID, writer http.ResponseWriter, request *http.Request) error

// HandleOptions invoked on an OPTIONS request

HandleOptions(from gen.PID, writer http.ResponseWriter, request *http.Request) error

}

AddRoute(match string, route gen.NetworkRoute, weight int) error

Cookie: overrides the default Cookie value specified in gen.NetworkOptions for the given route, providing custom authentication or security settings specific to the connection.

Cert: specifies a CertManager to establish a TLS connection, ensuring that the appropriate certificates are used during the connection.

Flags: you can override specific flags for the given route. For example, you can explicitly disable certain mechanisms, such as preventing the remote node from spawning processes over the established connection.

AtomMapping: enables automatic substitution of gen.Atom values transmitted or received during the connection.

LogLevel: defines the logging level for the network stack within the established connection. This provides granular control over the verbosity of log messages for troubleshooting or monitoring network activity on a per-connection basis.

package main

import (

"time"

"ergo.services/ergo"

"ergo.services/ergo/gen"

"ergo.services/logger/rotate"

)

func main() {

var options gen.NodeOptions

ropt := rotate.Options{Period: time.Minute, Compress: false}

rlog, err := rotate.CreateLogger(ropt)

if err != nil {

panic(err)

}

logger := gen.Logger{

Name: "rotate",

Logger: rlog,

}

options.Log.Loggers = append(options.Log.Loggers, logger)

node, err := ergo.StartNode("demo@localhost", options)

if err != nil {

panic(err)

}

node.Wait()

}

meta.CreateWebHandler, gen.MetaProcessBehavior, http.Handler

It takes meta.WebHandlerOptions as an argument:

Process: The name of the process to which transformed asynchronous meta.MessageWebRequest messages are sent. If not specified, these messages are sent to the parent meta-process.

RequestTimeout: The time allowed for the process to handle the message, with a default of 5 seconds.

After successful creation, you need to start the meta-process using SpawnMeta from the gen.Process interface.

Once the meta-process is running, it can be used as an HTTP request handler.

To create the meta.WebServer meta-process, use the meta.CreateWebServer function with the meta.WebServerOptions argument. These options allow you to set:

Host: The interface on which the port will be opened for handling HTTP requests.

Port: The port number.

CertManager: Enables TLS encryption for the HTTP server. You can use the node's CertManager to activate the node's certificate by using the CertManager() method of the gen.Node interface.

Handler: Specifies the HTTP request handler.

When the meta-process is created, the HTTP server starts. If the server fails to start, meta.CreateWebServer returns an error. After successful creation, start the meta-process using SpawnMeta(...) from the gen.Process interface.

Example:

An example can be found in the repository at https://github.com/ergo-services/examples, specifically in the demo project.

//

// WebWorker

//

func factory_MyWebWorker() gen.ProcessBehavior {

return &MyWebWorker{}

}

type MyWebWorker struct {

act.WebWorker

}

// Handle GET requests.

func (w *MyWebWorker) HandleGet(from gen.PID, writer http.ResponseWriter, request *http.Request) error {

w.Log().Info("got HTTP request %q", request.URL.Path)

writer.WriteHeader(http.StatusOK)

return nil

}

//

// Pool of workers

//

type MyPool struct {

act.Pool

}

func factory_MyPool() gen.ProcessBehavior {

return &MyPool{}

}

// Init invoked on a spawn Pool for the initializing.

func (p *MyPool) Init(args ...any) (act.PoolOptions, error) {

opts := act.PoolOptions{

WorkerFactory: factory_MyWebWorker,

}

p.Log().Info("started process pool of MyWebWorker with %d workers", opts.PoolSize)

return opts, nil

}

"Pool of Workers" design pattern

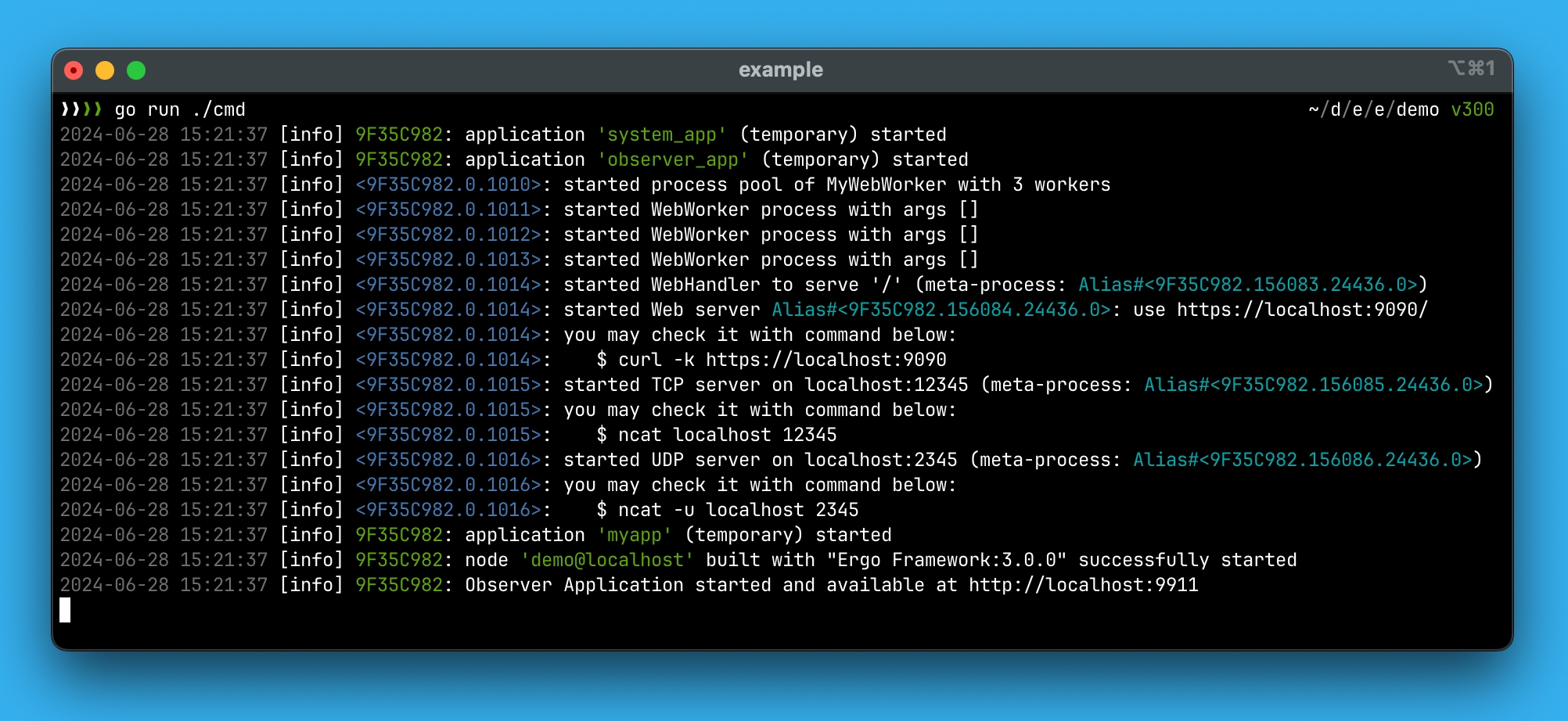

The act.Pool actor implements the low-level gen.ProcessBehavior interface and provides the "Pool of Workers" design pattern functionality. All asynchronous messages and synchronous requests sent to the pool process are redirected to worker processes in the pool.

To launch a process based on act.Pool, you need to embed it in your object. For example:

act.Pool uses the act.PoolBehavior interface to interact with your object:

The Init method is mandatory for implementation in act.PoolBehavior, while the other methods are optional. Similar to act.Actor, act.Pool has the embedded gen.Process interface, allowing you to use its methods directly from your object:

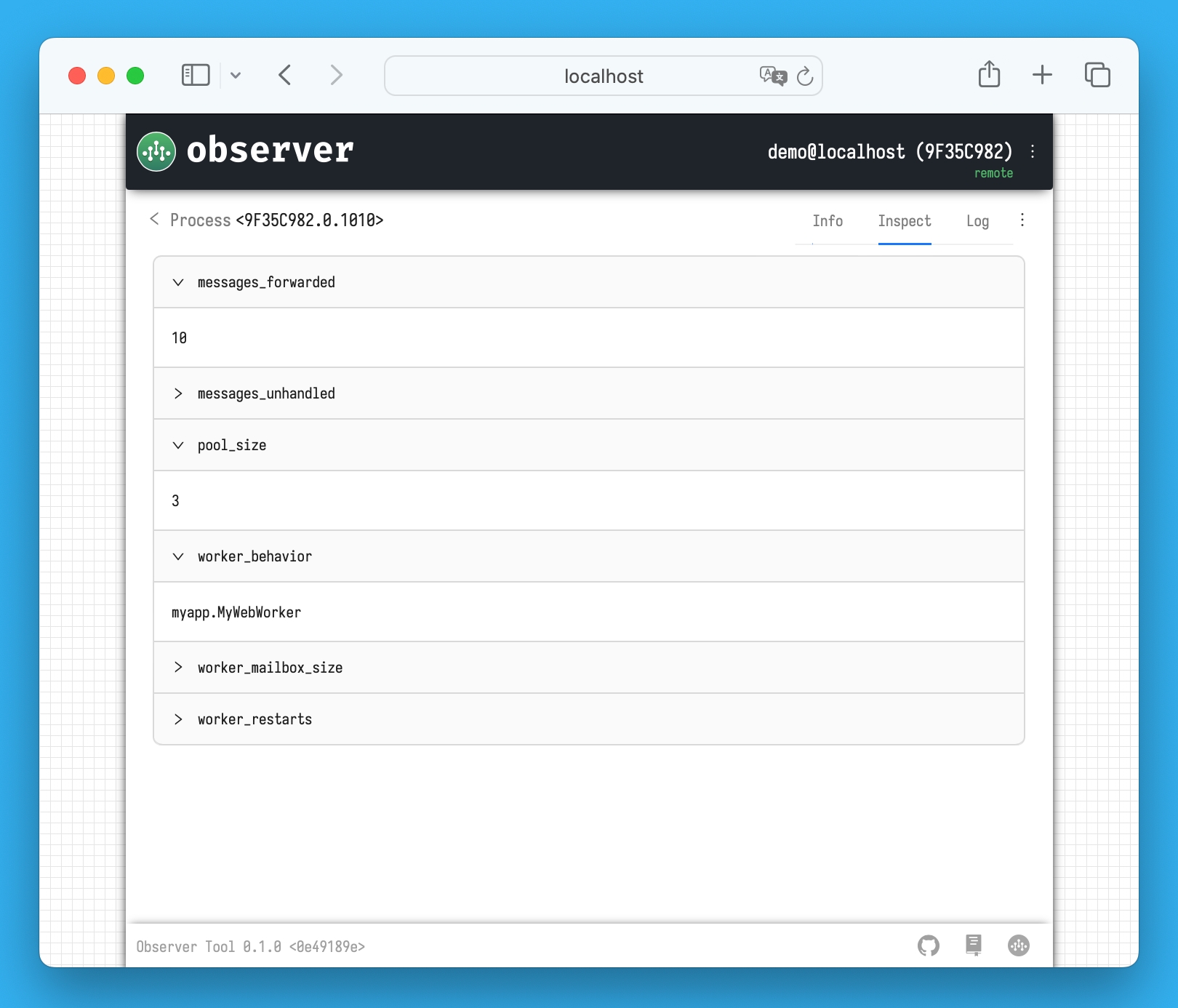

With act.PoolOptions, you can configure various parameters for the pool, such as:

PoolSize: Determines the number of worker processes to be launched when your pool process starts. If this option is not set, the default value of 3 is used.

WorkerFactory: A factory function that creates worker processes.

WorkerMailboxSize: Specifies the size of the mailbox for each worker process.

All asynchronous messages and synchronous requests received in the pool process's mailbox are automatically forwarded to worker processes, distributing the load evenly. The load is distributed using a FIFO queue of worker processes.

Message/Request Handling Algorithm:

Select a worker from the FIFO queue.

Forward the message/request.

If errors like gen.ErrProcessUnknown or gen.ErrProcessTerminated occur, a new worker is started, and the message is sent to it.

If all workers are busy (e.g., due to gen.ErrMailboxFull), the pool process logs an error, such as "no available worker process. ignored message from <PID>."
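The forwarding algorithm above can be sketched as a rotating queue of workers with bounded mailboxes. This is a simplified model rather than the act.Pool implementation: the pool and worker types are hypothetical, a buffered channel stands in for a worker mailbox, and a dead flag stands in for the gen.ErrProcessUnknown and gen.ErrProcessTerminated errors.

```go
package main

import (
	"errors"
	"fmt"
)

// worker is a hypothetical stand-in for a worker process.
type worker struct {
	id      int
	mailbox chan string // bounded mailbox
	dead    bool        // models a terminated worker
}

// pool keeps workers in a FIFO queue.
type pool struct {
	queue   []*worker
	nextID  int
	boxSize int
}

var errNoWorker = errors.New("no available worker process")

func newPool(size, boxSize int) *pool {
	p := &pool{boxSize: boxSize}
	for i := 0; i < size; i++ {
		p.queue = append(p.queue, p.spawn())
	}
	return p
}

func (p *pool) spawn() *worker {
	p.nextID++
	return &worker{id: p.nextID, mailbox: make(chan string, p.boxSize)}
}

// forward takes workers from the head of the queue, returns them to the tail,
// and delivers the message to the first one whose mailbox has room.
// It returns the id of the worker that accepted the message.
func (p *pool) forward(msg string) (int, error) {
	for attempt := 0; attempt < len(p.queue); attempt++ {
		w := p.queue[0]
		p.queue = p.queue[1:]
		if w.dead {
			w = p.spawn() // replace a terminated worker with a new one
		}
		p.queue = append(p.queue, w)
		select {
		case w.mailbox <- msg:
			return w.id, nil
		default:
			// mailbox is full; try the next worker in the queue
		}
	}
	return 0, errNoWorker
}

func main() {
	p := newPool(2, 1)
	for _, m := range []string{"a", "b", "c"} {
		id, err := p.forward(m)
		fmt.Println(m, id, err)
	}
}
```

Rotating the queue on every attempt spreads messages across workers, and the error path corresponds to the case where the pool logs that no worker is available and drops the message.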

Forwarding to workers only happens for messages from the Main queue. To ensure a message is handled by the pool process itself, send it with a priority higher than gen.MessagePriorityNormal. The gen.Process interface provides SendWithPriority and CallWithPriority methods for sending messages or making synchronous requests with a specified priority.

To dynamically manage the number of worker processes, act.Pool provides the following methods:

AddWorkers: This method adds a specified number of worker processes to the pool. Upon successful execution, it returns the total number of worker processes in the pool after the addition.

RemoveWorkers: This method stops and removes a specified number of worker processes from the pool. Upon successful execution, it returns the total number of worker processes remaining in the pool after the removal.

Linking and Monitoring Mechanisms

These mechanisms allow processes to respond to termination events of targets with which links or monitors have been created. Such targets can include:

A process identifier (gen.PID)

A process name (gen.Atom or gen.ProcessID)

A process alias (gen.Alias)

An event (gen.Event)

A node name (gen.Atom, in the case of monitoring a node connection)

The key difference between linking and monitoring lies in the response to the termination event of the target. When a process creates a link with a target, and that target terminates, the linked process receives an exit signal, which generally causes it to terminate as well.

In contrast, when a process creates a monitor with a target, and the target terminates, the monitoring process receives a gen.MessageDown* message, which contains the reason for the target's termination. This notification allows the monitoring process to react accordingly without necessarily terminating itself.

In Ergo Framework, links are created between processes to ensure that termination events of one process propagate to linked processes. The gen.Process interface provides the following methods for creating and managing links:

Link: This universal method allows you to create a link to a target, which can be a gen.Atom (for a local process), gen.PID, gen.ProcessID, or gen.Alias. The process will receive an exit signal upon the target process's termination. To remove the link, use the Unlink method with the same argument.

Exit signals are always delivered with the highest priority and placed in the Urgent queue of the process's mailbox.

Monitors allow a process to observe the lifecycle of a target process or event. The gen.Process interface provides several methods for creating monitors:

Monitor: A universal method for creating a monitor. The target can be a gen.Atom (for a local process), gen.PID, gen.ProcessID, or gen.Alias. The monitoring process will receive a gen.MessageDown* message upon the termination of the target process. To remove the monitor, use the Demonitor method with the same argument.

Messages of type gen.MessageDown* are delivered with high priority and placed in the System queue of the process's mailbox.
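The effect of the Urgent and System queues on processing order can be sketched as follows. This is an illustrative model only: the mailbox type is hypothetical, the Log queue is left out, and the strict order Urgent, then System, then Main is an assumption inferred from the priorities described above.

```go
package main

import "fmt"

// mailbox is a hypothetical model with three of the queues mentioned above.
type mailbox struct {
	urgent []string // exit signals
	system []string // gen.MessageDown* notifications
	mainQ  []string // regular messages
}

// next returns the oldest message from the highest-priority non-empty queue.
func (m *mailbox) next() (string, bool) {
	for _, q := range []*[]string{&m.urgent, &m.system, &m.mainQ} {
		if len(*q) > 0 {
			msg := (*q)[0]
			*q = (*q)[1:]
			return msg, true
		}
	}
	return "", false
}

func main() {
	m := &mailbox{}
	// Messages arrive in this order...
	m.mainQ = append(m.mainQ, "regular message")
	m.system = append(m.system, "down message")
	m.urgent = append(m.urgent, "exit signal")

	// ...but are handled in priority order.
	for {
		msg, ok := m.next()
		if !ok {
			break
		}
		fmt.Println(msg)
	}
}
```

Under this model an exit signal is handled before any pending down notification, and both are handled before regular messages that arrived earlier.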

Thanks to network transparency, the linking and monitoring mechanisms in Ergo Framework can be used with targets on remote nodes.

When a process creates a link or monitor with a remote target (such as a process, alias, or event), if the connection to the node where the target resides is lost, the process will receive an exit signal or a gen.MessageDown* message, depending on the mechanism used. In this case, the reason for termination will be gen.ErrNoConnection. This allows processes to handle network disconnections and remote target failures seamlessly, as part of the same fault-tolerant mechanisms used for local processes.

For working with TCP connections, two types of meta-processes are implemented in Ergo Framework:

TCP Server Process: Responsible for creating a TCP server. It opens the socket and handles incoming connections.

TCP Connection Handler: Manages established TCP connections, whether they are incoming or client-initiated.

To create a TCP server meta-process, use the meta.CreateTCPServer function. It accepts meta.TCPServerOptions with the following parameters:

Host, Port: Specify the interface and port for incoming connections.

ProcessPool: Defines a list of worker processes that handle incoming connections. Each new connection is assigned a worker from the pool. If ProcessPool is not set, the parent process handles all connections. Using act.Pool as a ProcessPool entry is not recommended, because it can lead to packets being mishandled.

CertManager: Enables TLS encryption.

After creating the meta-process, start it with the SpawnMeta method of the gen.Process interface.

If starting the meta-process fails for any reason, free the port specified in meta.TCPServerOptions.Port by calling the Terminate method of the gen.MetaBehavior interface.

Upon an incoming connection, the TCP server meta-process creates and starts a new meta-process to handle that connection.

To create a client TCP connection, use the meta.CreateTCPConnection function. It initiates a TCP connection and, if successful, creates a meta-process to manage it.

The function takes meta.TCPConnectionOption, similar to meta.TCPServerOptions, but instead of ProcessPool, it includes a Process field for specifying a process to handle TCP packets. If this field is not used, all data packets are sent to the parent process.

After a successful connection, start the meta-process with SpawnMeta. If starting the meta-process fails, close the connection by calling the Terminate method of the gen.MetaBehavior interface.

To handle a TCP connection, the meta-process communicates with either the parent or worker process based on the meta-process's launch options. Three types of messages are used:

meta.MessageTCPConnect: Sent when the meta-process starts. It contains the connection ID (ID), RemoteAddr, and LocalAddr.

meta.MessageTCPDisconnect: Sent when the TCP connection is closed, including cases where the meta-process is terminated.

meta.MessageTCP: Carries the TCP data itself. It is used both for packets received from the connection and for data sent to it.

If the meta-process cannot send a message to the worker process, the connection is terminated, and the meta-process stops working.

To send a message to a TCP connection, use the Send (or SendAlias) method from the gen.Process interface. The recipient should be the gen.Alias of the meta-process managing the connection. The message must be of type meta.MessageTCP. Note that the meta.MessageTCP.ID field is ignored during sending (it is only used for incoming messages).

For a detailed example of a TCP server implementation, see the demo project in the project's repository.

This package implements the gen.Registrar interface and serves as a client library for the central registrar, Saturn. In addition to the primary Service Discovery function, it automatically notifies all connected nodes about cluster configuration changes.

To create a client, use the Create function from the saturn package. The function requires:

The hostname where the central registrar is running (default port: 4499, unless specified in saturn.Options).

A token for connecting to Saturn.

A set of options, saturn.Options.

Then set this client in the gen.NetworkOption.Registrar option.

Using saturn.Options, you can specify:

Cluster - The cluster name for your node

Port - The port number for the central Saturn registrar

KeepAlive - The keep-alive parameter for the TCP connection with Saturn

When the node starts, it will register with the central registrar in the specified cluster.

Additionally, this library registers a gen.Event and generates messages based on events received from the central Saturn registrar within the specified cluster. This keeps the node informed of changes in the cluster:

saturn.EventNodeJoined - Triggered when another node is registered in the same cluster.

saturn.EventNodeLeft - Triggered when a node disconnects from the central registrar.

saturn.EventApplicationLoaded - Triggered when an application is loaded on a remote node. Use the ResolveApplication method of the gen.Resolver interface to get its routing information.

To receive these messages, subscribe to the Saturn client's event using the LinkEvent or MonitorEvent method of the gen.Process interface. The name of the registered event is returned by the Event method of the gen.Registrar interface. Your node can then react to cluster events such as node joins, application starts, and configuration updates.

Using the saturn.EventApplication* events and the feature, you can dynamically manage the functionality of your cluster. The saturn.EventConfigUpdate events allow you to adjust the cluster configuration on the fly without restarting nodes, such as updating the cookie value for all nodes or refreshing the TLS certificate. Refer to the section for more details.

You can also use the Config and ConfigItem methods from the gen.Registrar interface to retrieve configuration parameters from the registrar.

To get information about available applications in the cluster, use the ResolveApplication method from the gen.Resolver interface, which returns a list of gen.ApplicationRoute structures:

Name: The name of the application.

Node: The name of the node where the application is loaded or running.

Weight: The weight assigned to the application in gen.ApplicationSpec.

You can access the gen.Resolver interface using the Resolver method from the gen.Registrar interface.

Ergo Service Registry and Discovery

saturn is a tool designed to simplify the management of clusters of nodes created using the Ergo Framework. It offers the following features:

A unified registry for node registration within a cluster.

The ability to manage multiple clusters simultaneously.

The capability to manage the configuration of the entire cluster without restarting the nodes connected to Saturn (configuration changes are applied on the fly).

Notifications to all cluster participants about changes in the status of applications running on nodes connected to Saturn.

The source code of the saturn tool is available on the project's page.

To install saturn, use the following command:

Available arguments:

host: Specifies the hostname to use for incoming connections.

port: Port number for incoming connections. The default value is 4499.

path: Path to the configuration file saturn.yaml

To start Saturn, a configuration file named saturn.yaml is required. By default, Saturn expects this file to be located in the current directory. You can specify a different location for the configuration file using the -path argument.

You can find an example configuration file in the project's Git repository.

The saturn.yaml configuration file contains two root elements:

Saturn: This section includes settings for the Saturn server.

You can configure the Token for access by remote nodes and specify certificate files for TLS connections.

By default, a self-signed certificate is used. For clients to accept this certificate, they must enable the InsecureSkipVerify option when creating the client.

If the name of a configuration element ends with the suffix .file, the value of that element is treated as a file. The content of this file is then sent to the nodes as a []byte.

To configure settings for all nodes in all clusters, use the Clusters section in the saturn.yaml configuration file. Here, you can define global settings that will apply to every node within every cluster managed by Saturn:

In this example:

Var1, Var2, Var3, and Var4 will be applied to all nodes in all clusters.

However, the value of Var1 for nodes named [email protected] in any cluster will be overridden with the value 456.

If nodes are registered without specifying a Cluster in saturn.Options, they become part of the general cluster. Configuration for the general cluster should be provided in the Cluster@ section.

In the example above:

The variable Var1 is set to 789 for the general cluster (all nodes in the general cluster will receive Var1: 789).

However, for the node [email protected] within the general cluster, Var1 will be overridden to 456.

Thus, every node in the general cluster inherits Var1: 789 from the Cluster@ section, except for [email protected], which receives Var1: 456 because of its explicit override.
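The override rules described above can be read as a layered merge, in which more specific layers win over more general ones. The sketch below illustrates that reading with plain Go maps. It is not Saturn's implementation: the settings type, the resolve function, and the global value 123 are assumptions made for illustration, while 789 and 456 are the values used in the text.

```go
package main

import "fmt"

// settings is one layer of key/value configuration.
type settings map[string]any

// resolve merges the layers from the least to the most specific one:
// later layers override keys set by earlier ones.
func resolve(layers ...settings) settings {
	out := settings{}
	for _, layer := range layers {
		for k, v := range layer {
			out[k] = v
		}
	}
	return out
}

func main() {
	global := settings{"Var1": 123, "Var2": 12.3} // applies to all clusters (values assumed)
	general := settings{"Var1": 789}              // general cluster section
	node := settings{"Var1": 456}                 // override for one node

	// A node in the general cluster without its own override.
	fmt.Println(resolve(global, general)["Var1"])
	// The node that has an explicit override.
	fmt.Println(resolve(global, general, node)["Var1"])
	// A key set only in the global layer is inherited unchanged.
	fmt.Println(resolve(global, general, node)["Var2"])
}
```

Passing the layers as variadic arguments keeps the precedence visible at the call site: whatever is listed last wins.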

To specify settings for a particular cluster, use the element name Cluster@<cluster name> in the configuration file:

Saturn can manage multiple clusters simultaneously, but resolve requests from nodes are handled only within their own cluster.

The name of a registered node must be unique within its cluster.

When a node registers, it informs the registrar which cluster it belongs to. Additionally, the node reports the applications running on it. Other nodes in the same cluster receive notifications about the newly connected node and its applications. Any changes in application statuses are also reported to the registrar, which in turn notifies all participants in the cluster.

For more details, see the section.

The network transparency of Ergo Framework allows processes to exchange messages regardless of whether they are running locally or on remote nodes. The process identifier (gen.PID, gen.ProcessID, gen.Alias) includes the name of the node where the process is running—this information is used by the node to route the message.

If a message is being sent to a process on a remote node, the Service Discovery mechanism helps determine how to establish a network connection to that node. Once the connection is established, the node automatically encodes the message and sends it to the remote node, which then delivers it to the recipient process's mailbox.
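The routing decision described above depends only on the node name carried inside the identifier. A minimal sketch of that decision follows. The pid struct and the route function are hypothetical stand-ins written for this example; they are not the gen.PID type or the node's actual routing code, and the node names are made up.

```go
package main

import "fmt"

// pid is a hypothetical stand-in for an identifier such as gen.PID:
// it carries the name of the node that owns the process.
type pid struct {
	Node string
	ID   uint64
}

// route reports where a message for the target should go,
// given the name of the local node.
func route(localNode string, to pid) string {
	if to.Node == localNode {
		return "local mailbox"
	}
	// A real node would now resolve the remote node through service
	// discovery, connect if needed, encode the message and send it.
	return "network connection to " + to.Node
}

func main() {
	local := "node1@host1"
	fmt.Println(route(local, pid{Node: "node1@host1", ID: 1001}))
	fmt.Println(route(local, pid{Node: "node2@host2", ID: 1001}))
}
```

The sender's code is the same in both cases; only the node decides whether the network is involved.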

The Ergo Data Format (EDF) is used for data transmission within Ergo Framework.

EDF supports encoding and decoding of all scalar types in Golang, as well as specialized types from Ergo Framework. To encode custom types, register them using the edf.RegisterTypeOf function from the ergo.services/ergo/net/edf package. A custom type must be registered on both the sender and the receiver nodes.

If you're registering a data type that contains another custom type among its child elements, you must register the child type before registering the parent type.
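To make the ordering rule concrete, here is a small standard-library sketch of a registry that refuses a parent struct until the struct types of its fields are known. It only models the rule. The registry type, its register method, and the Address and Person types are hypothetical and say nothing about how EDF itself is implemented.

```go
package main

import (
	"fmt"
	"reflect"
)

// registry is a hypothetical type registry used to illustrate the rule.
type registry struct {
	known map[reflect.Type]bool
}

func newRegistry() *registry {
	return &registry{known: make(map[reflect.Type]bool)}
}

// register accepts a struct value only if every struct-typed field
// has already been registered.
func (r *registry) register(v any) error {
	t := reflect.TypeOf(v)
	if t == nil || t.Kind() != reflect.Struct {
		return fmt.Errorf("only struct values are supported in this sketch")
	}
	for i := 0; i < t.NumField(); i++ {
		ft := t.Field(i).Type
		if ft.Kind() == reflect.Struct && !r.known[ft] {
			return fmt.Errorf("%s: field type %s is not registered", t.Name(), ft.Name())
		}
	}
	r.known[t] = true
	return nil
}

// Address is the child type, Person the parent that embeds it as a field.
type Address struct {
	City string
}

type Person struct {
	Name string
	Home Address
}

func main() {
	r := newRegistry()
	fmt.Println("parent first:", r.register(Person{}))
	fmt.Println("child:", r.register(Address{}))
	fmt.Println("parent again:", r.register(Person{}))
}
```

The first call fails because Address is still unknown; after the child is registered, the same call for Person succeeds.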


All child elements in the registered structures must be public. If you need to encode/decode structures with private fields, you will need to implement a custom encoder/decoder for that data type. To do this, you must implement the edf.Marshaler and edf.Unmarshaler interfaces.

These interfaces allow you to define how the custom type is marshaled (encoded) and unmarshaled (decoded), giving you control over the serialization and deserialization process for types with private fields or special requirements.
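The sketch below shows the general shape of a custom encoder and decoder for a struct with private fields. It is an illustration under stated assumptions, not the library's own example: the method signatures MarshalEDF(io.Writer) error and UnmarshalEDF([]byte) error are assumed, the two interfaces are declared locally so the program compiles with the standard library alone, and the account type and its 12-byte layout are invented.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"errors"
	"fmt"
	"io"
)

// Local copies of the two interfaces with the assumed method signatures.
// Real code would satisfy edf.Marshaler and edf.Unmarshaler instead.
type marshaler interface {
	MarshalEDF(w io.Writer) error
}

type unmarshaler interface {
	UnmarshalEDF(data []byte) error
}

// account keeps its fields private, so generic field-by-field
// encoding cannot reach them.
type account struct {
	id      uint32
	balance int64
}

// MarshalEDF writes id and balance as 12 big-endian bytes.
func (a account) MarshalEDF(w io.Writer) error {
	var buf [12]byte
	binary.BigEndian.PutUint32(buf[0:4], a.id)
	binary.BigEndian.PutUint64(buf[4:12], uint64(a.balance))
	_, err := w.Write(buf[:])
	return err
}

// UnmarshalEDF restores the fields from the layout written by MarshalEDF.
func (a *account) UnmarshalEDF(data []byte) error {
	if len(data) != 12 {
		return errors.New("account: unexpected payload length")
	}
	a.id = binary.BigEndian.Uint32(data[0:4])
	a.balance = int64(binary.BigEndian.Uint64(data[4:12]))
	return nil
}

// roundTrip encodes src and decodes the resulting bytes into dst.
func roundTrip(src marshaler, dst unmarshaler) error {
	var buf bytes.Buffer
	if err := src.MarshalEDF(&buf); err != nil {
		return err
	}
	return dst.UnmarshalEDF(buf.Bytes())
}

func main() {
	in := account{id: 7, balance: -250}
	var out account
	if err := roundTrip(in, &out); err != nil {
		fmt.Println("round trip failed:", err)
		return
	}
	fmt.Println("values match:", out == in)
}
```

A fixed-size layout keeps the decoder's length check trivial; a type with variable-length fields would need to write explicit lengths as well.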


The length of a message encoded using the MarshalEDF method must not exceed 2^32 bytes.

In Golang, the error type is an interface. To transmit error types over the network, they also need to be registered using the edf.RegisterError method. A set of standard errors in Ergo Framework (such as gen.Err*, gen.TerminateReason*) is automatically registered when the node starts.

Additionally, the following limits are imposed on specific types:

gen.Atom: Maximum length of 255 bytes

string: Maximum length of 65,535 bytes (2^16 - 1)

binary: Maximum length of 2^32 bytes
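Because these limits are expressed in bytes, it can be useful to validate values before sending them, especially strings that contain multi-byte characters. The helper functions below are a hypothetical sketch and are not part of the framework. Only the atom and string limits are covered.

```go
package main

import (
	"fmt"
	"strings"
)

const (
	maxAtomLen   = 255   // bytes, limit for gen.Atom
	maxStringLen = 65535 // bytes, limit for string
)

// checkAtom reports an error if the atom name exceeds the limit.
// len on a Go string counts bytes, not characters.
func checkAtom(name string) error {
	if len(name) > maxAtomLen {
		return fmt.Errorf("atom is %d bytes, limit is %d", len(name), maxAtomLen)
	}
	return nil
}

// checkString reports an error if the string exceeds the limit.
func checkString(s string) error {
	if len(s) > maxStringLen {
		return fmt.Errorf("string is %d bytes, limit is %d", len(s), maxStringLen)
	}
	return nil
}

func main() {
	fmt.Println(checkAtom("worker"))
	fmt.Println(checkString(strings.Repeat("x", maxStringLen+1)))
}
```

The first call passes, while the second one returns an error because the string is one byte over the limit.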

When establishing a connection between nodes, during the handshake phase, the nodes exchange dictionaries containing registered data types and registered errors. These dictionaries are used to reduce the volume of transmitted information during message exchanges, as identifiers are used instead of the names of registered types and errors.

If you register a data type or an error after the connection is established, sending data of that type will result in an error. To make these newly registered types available for network exchange, you will need to disconnect and re-establish the connection.
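The effect of the handshake can be pictured as a snapshot: the dictionary is built once from whatever is registered at that moment and is not updated afterwards. The following sketch models that behavior with a plain map. The dictionary type, the handshake and typeID functions, and the type names are all hypothetical; the real exchange format is not shown here.

```go
package main

import "fmt"

// dictionary maps registered type names to the compact identifiers
// used on one connection.
type dictionary map[string]uint16

// handshake freezes the names registered so far into a dictionary.
func handshake(registered []string) dictionary {
	d := make(dictionary, len(registered))
	for i, name := range registered {
		d[name] = uint16(i)
	}
	return d
}

// typeID returns the identifier sent on the wire instead of the type name.
func (d dictionary) typeID(name string) (uint16, error) {
	id, ok := d[name]
	if !ok {
		return 0, fmt.Errorf("type %q was not registered before the handshake", name)
	}
	return id, nil
}

func main() {
	registered := []string{"Order", "Invoice"}
	conn := handshake(registered)

	// Registered after the connection was established.
	registered = append(registered, "Refund")

	_, err := conn.typeID("Refund")
	fmt.Println("existing connection:", err)

	// Reconnecting repeats the handshake with the current registrations.
	reconnected := handshake(registered)
	_, err = reconnected.typeID("Refund")
	fmt.Println("after reconnect:", err)
}
```

The existing connection reports an error for the late registration, and the lookup succeeds only on the dictionary built after reconnecting.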

The EDF format does not support pointers. The use of pointers should remain an internal optimization within your application and should not extend beyond it.

What is a Process in Ergo Framework

In Ergo Framework, a process is a lightweight entity that operates on top of a goroutine and is based on the actor model. Each process has a mailbox for receiving incoming messages. The size of the mailbox is determined by the gen.ProcessOptions.MailboxSize parameter when the process is started. By default, this value is set to zero, which makes the mailbox unlimited in size. Inside the mailbox, there are several queues—Main, System, Urgent, and Log—that prioritize the processing of messages.

Any running process can send messages and make synchronous calls to other processes, including those running on remote nodes. Additionally, a process can spawn new processes, both locally and on remote nodes.